Введение

Целью данной работы ставилось построение демо стенда для изучения возможностей Oracle Data Guard из узлов Oracle RAC 12.1.0.2.0.

Так как под рукой у меня не нашлось сервера, на котором я бы мог разместить все необходимые мне виртуальные машины (7 штук), то строить будем с использованием офисных PC.

Итого имеем:

- 3 PC с такими характеристиками: CPU i5, 16 GB RAM

- Обычная офисная сеть 1Gbit/s

На двух PC разместятся два сайта Oracle Data Guard, в каждом из которых по 2 узла Oracle RAC 12c и один Far Sync экземпляр в отдельных виртульных машинах.

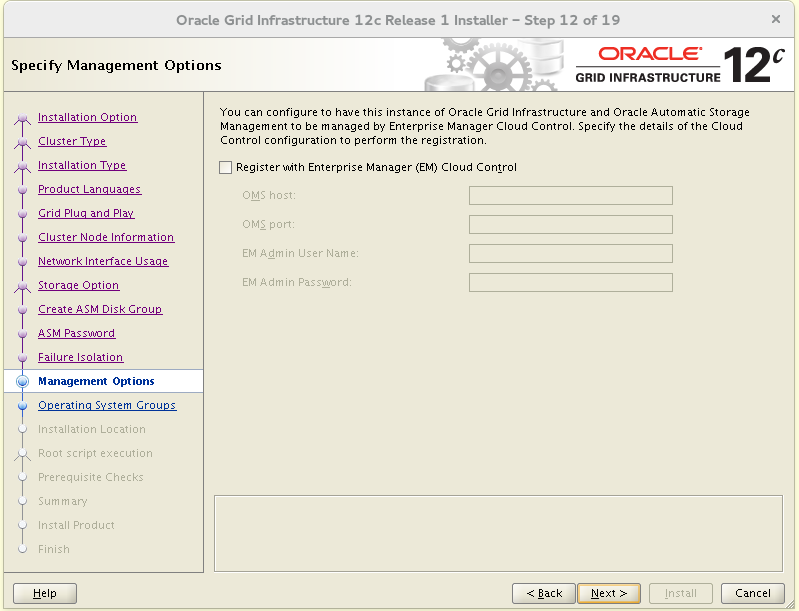

На третьем PC разместится одна виртуалка управления с Oracle Enterprise Manager Cloud Control 12c Release 5 (12.1.0.5). Насчет EM — дальше я о нем упоминать не буду ввиду того, что это отдельная тема в данном случае больше связанная не с построением стенда Data Guard, а с его использованием.

Необходимое программное обеспечение Oracle скачиваем с их сайта, а в качестве основной операционной системы я выбрал Fedora 22 с qemu-kvm + libvirt + openvswitch. В качестве гостевой ОС используем Oracle Linux 6.6.

Подготовка Fedora для хостинга виртуальных машин

Каждая из виртуальных машин будет иметь по 4 сетевых интерфейса. Назначение сетей:

| 192.168.100.0/24 | #VLAN 100 Public Clusterware network |

| 192.168.101.0/24 | #VLAN 101 Interconnect 1 |

| 192.168.102.0/24 | #VLAN 101 Interconnect 2 |

| 192.168.103.0/24 | #VLAN 103 Data Guard |

Interconnect кластера будет у нас дублирован, для трафика Data Guard выделена отдельная сеть. Есть еще внешняя сеть и один порт Open vSwitch на каждом PC использует физический интерфейс, которой получает IP адрес по DHCP из этой сети.

Распределим IP адреса следующим образом:

# cat /etc/hosts

| 192.168.100.1 | prmy.example.com | prmy | # PC1 Primary RAC Database |

| 192.168.100.2 | sby.example.com | sby | # PC2 Standy RAC Database |

| 192.168.100.3 | em.example.com | em | # PC3 EM 12c |

| 192.168.100.11 | prmy1.example.com | prmy1 | # PC1 VM1 Node 1 Primary RAC Database |

| 192.168.100.12 | prmy2.example.com | prmy2 | # PC1 VM2 Node 2 Primary RAC Database |

| 192.168.100.13 | prmy3.example.com | prmy3 | # PC1 VM3 Primary Far Sync |

| 192.168.100.21 | sby1.example.com | sby1 | # PC2 VM1 Node 1 Standy RAC Database |

| 192.168.100.22 | sby2.example.com | sby2 | # PC2 VM2 Node 2 Standy RAC Database |

| 192.168.100.23 | sby3.example.com | sby3 | # PC2 VM3 Standy Far Sync |

сформируем имена на каждом из компьютеров:

[root@prmy ~]# hostnamectl set-hostname prmy.example.com [root@sby ~]# hostnamectl set-hostname sby.example.com [root@em ~]# hostnamectl set-hostname em.example.com Избавляемся от GNOME на каждой из персоналок:

# systemctl set-default multi-user.target Сконфигурируем HugeMem для виртуалок из расчета 4300M на каждую.

# echo "vm.nr_hugepages=6450" > /etc/sysctl.d/hugepages.conf Включим маршрутизацию:

# echo "net.ipv4.ip_forward = 1" > /etc/sysctl.d/forward.conf Кластерам нужна синхронизация времени, поэтому на prmy конфигурируем chronyd:

[root@prmy ~]# echo "allow 192.168.100/24" >> /etc/chrony.conf [root@prmy ~]# systemctl enable chronyd На prmy конфигурируем DHCP сервер для раздачи IP адресов виртуалкам:

root@prmy ~]# dnf install dhcp [root@prmy ~]# systemctl enable dhcpd [root@prmy ~]# vi /etc/dhcp/dhcpd.conf

# # DHCP Server Configuration file. # see /usr/share/doc/dhcp/dhcpd.conf.example # see dhcpd.conf(5) man page # authoritative; option domain-name "example.com"; ddns-domainname "example.com"; ddns-rev-domainname "in-addr.arpa."; ddns-update-style interim; ddns-updates on; ignore client-updates; default-lease-time 21600; max-lease-time 43200; # Public subnet 192.168.100.0 netmask 255.255.255.0 { option routers 192.168.100.1; option subnet-mask 255.255.255.0; option broadcast-address 192.168.100.255; option domain-name-servers 192.168.100.1; option ntp-servers 192.168.100.1; option ip-forwarding on; pool { range 192.168.100.100 192.168.100.254; } group { host prmy1 { hardware ethernet 00:16:3e:00:01:01; fixed-address 192.168.100.11; } host prmy2 { hardware ethernet 00:16:3e:00:01:02; fixed-address 192.168.100.12; } host prmy3 { hardware ethernet 00:16:3e:00:01:03; fixed-address 192.168.100.13; } host sby1 { hardware ethernet 00:16:3e:00:02:01; fixed-address 192.168.100.21; } host sby2 { hardware ethernet 00:16:3e:00:02:02; fixed-address 192.168.100.22; } host sby3 { hardware ethernet 00:16:3e:00:02:03; fixed-address 192.168.100.23; } } } # Interconnect 1 subnet 192.168.101.0 netmask 255.255.255.0 { option subnet-mask 255.255.255.0; option broadcast-address 192.168.101.255; range 192.168.101.100 192.168.101.254; } # Interconnect 2 subnet 192.168.102.0 netmask 255.255.255.0 { option subnet-mask 255.255.255.0; option broadcast-address 192.168.102.255; range 192.168.102.100 192.168.102.254; } # Data Guard subnet 192.168.103.0 netmask 255.255.255.0 { option subnet-mask 255.255.255.0; option broadcast-address 192.168.103.255; pool { range 192.168.103.100 192.168.103.254; } group { host prmy1-dg { hardware ethernet 00:16:3e:00:01:31; fixed-address 192.168.103.11; } host prmy2-dg { hardware ethernet 00:16:3e:00:01:32; fixed-address 192.168.103.12; } host prmy3-dg { hardware ethernet 00:16:3e:00:01:33; fixed-address 192.168.103.13; } host sby1-dg { hardware ethernet 00:16:3e:00:02:31; fixed-address 192.168.103.21; } host sby2-dg { hardware ethernet 00:16:3e:00:02:32; fixed-address 192.168.103.22; } host sby3-dg { hardware ethernet 00:16:3e:00:02:33; fixed-address 192.168.103.23; } } }

Настраиваем DNS

[root@prmy ~]# dnf install bind [root@prmy ~]# systemctl enable named В стандартный /etc/named.conf добавим следующие стрчки:

[root@prmy ~]# vi /etc/named.conf

listen-on port 53 { 192.168.100.1; }; allow-query { localhost; 192.168.100.0/24; }; allow-query-cache { localhost; 192.168.100.0/24; }; allow-recursion { localhost; 192.168.100.0/24; }; zone "example.com" { type master; file "dynamic/example.zone"; allow-update { key "rndc-key"; }; notify yes; }; zone "100.168.192.in-addr.arpa" IN { type master; file "dynamic/192.168.100.zone"; allow-update { key "rndc-key"; }; notify yes; }; zone "103.168.192.in-addr.arpa" IN { type master; file "dynamic/192.168.103.zone"; allow-update { key "rndc-key"; }; notify yes; };

Создаем зоны:

[root@prmy ~]# vi /var/named/dynamic/example.zone

$ORIGIN . $TTL 86400 ; 1 day example.com IN SOA dns.example.com. sysadmin.example.com. ( 59 ; serial 28800 ; refresh (8 hours) 7200 ; retry (2 hours) 2419200 ; expire (4 weeks) 86400 ; minimum (1 day) ) IN NS dns.example.com. $ORIGIN example.com. $TTL 10800 ; 3 hours $TTL 86400 ; 1 day dns A 192.168.100.1 prmy CNAME dns prmy1 A 192.168.100.11 prmy2 A 192.168.100.12 prmy3 A 192.168.100.13 sby A 192.168.100.2 sby1 A 192.168.100.21 sby2 A 192.168.100.22 sby3 A 192.168.100.23 prmy1-dg A 192.168.103.11 prmy2-dg A 192.168.103.12 prmy3-dg A 192.168.103.13 sby1-dg A 192.168.103.21 sby2-dg A 192.168.103.22 sby3-dg A 192.168.103.23 em A 192.168.100.3 clu-prmy-gns A 192.168.100.51 clu-prmy NS clu-prmy-gns.example.com.

[root@prmy ~]# vi /var/named/dynamic/192.168.100.zone

$ORIGIN . $TTL 86400 ; 1 day 100.168.192.in-addr.arpa IN SOA dns.example.com. sysadmin.example.com. ( 40 ; serial 28800 ; refresh (8 hours) 7200 ; retry (2 hours) 2419200 ; expire (4 weeks) 86400 ; minimum (1 day) ) NS dns.example.com. $ORIGIN 100.168.192.in-addr.arpa. 1 PTR dns.example.com. 11 PTR prmy1.example.com. 12 PTR prmy2.example.com. 13 PTR prmy3.example.com. 2 PTR sby.example.com. 21 PTR sby1.example.com. 22 PTR sby2.example.com. 23 PTR sby3.example.com. 3 PTR em.example.com. 51 PTR clu-prmy-gns.example.com.

[root@prmy ~]# vi /var/named/dynamic/192.168.103.zone

$ORIGIN . $TTL 86400 ; 1 day 103.168.192.in-addr.arpa IN SOA dns.example.com. sysadmin.example.com. ( 42 ; serial 28800 ; refresh (8 hours) 7200 ; retry (2 hours) 2419200 ; expire (4 weeks) 86400 ; minimum (1 day) ) NS dns.example.com. $ORIGIN 103.168.192.in-addr.arpa. 11 PTR prmy1-dg.example.com. 12 PTR prmy2-dg.example.com. 13 PTR prmy3-dg.example.com. 21 PTR sby1-dg.example.com. 22 PTR sby2-dg.example.com. 23 PTR sby3-dg.example.com.

[root@prmy ~]# chown named:named /var/named/dynamic/* У нас будет свои DHCP и DNS сервера и чтобы не мешать остальной офисной сети мы сконфигурируем для работы стенда свои подсети в отдельных VLAN.

Устанавливаем Open vSwitch:

# dnf install openvswitch # systemctl enable openvswitch # systemctl disable NetworkManager # systemctl enable network Создаем отдельный свич для public сети кластера:

# vi /etc/sysconfig/network-scripts/ifcfg-ovsbr0 DEVICE=ovsbr0 DEVICETYPE=ovs TYPE=OVSBridge ONBOOT=yes OVSBOOTPROTO="dhcp" OVSDHCPINTERFACES="enp3s0" DELAY=0 HOTPLUG=no NOZEROCONF=yes Вместе с мостом создается внутренний порт и на нем внутренний интерфейс по имени совпадающем с имением моста ovsbr0. Этот интерфейс будет получать IP адрес с офисного DHCP через физический интерфейс enp3s0.

В свою очередь физический интерфейс enp3s0 подключим к этому мосту:

# vi /etc/sysconfig/network-scripts/ifcfg-enp3s0 DEVICE="enp3s0" DEVICETYPE=ovs TYPE=OVSPort OVS_BRIDGE=ovsbr0 ONBOOT=yes HWADDR=60:a4:4c:3c:93:06 IPV4_FAILURE_FATAL=no IPV6INIT=no NM_CONTROLLED=no USERCTL=no NOZEROCONF=yes HOTPLUG=no Конфигурируем порт (VLAN 100) и интерфейс на Open vSwitch для public сети кластеров. Через него будем раздавать IP адреса, DNS и NTP для вируалок кластеров и EM 12c.

# vi /etc/sysconfig/network-scripts/ifcfg-pub0 DEVICE=pub0 TYPE="OVSIntPort" DEVICETYPE="ovs" OVS_BRIDGE="ovsbr0" OVS_OPTIONS="tag=100" OVS_EXTRA="set Interface $DEVICE external-ids:iface-id=$(hostname -s)-$DEVICE-vif" ONBOOT=yes BOOTPROTO=static IPADDR=192.168.100.1 PREFIX=24 IPV6INIT=no DELAY=0 HOTPLUG=no NOZEROCONF=yes Конфигурируем отдельный свич, порт (VLAN 101) и интерфейс на Open vSwitch для первого inetrconnect’а кластера.

# vi /etc/sysconfig/network-scripts/ifcfg-iconn1 DEVICE=iconn1 DEVICETYPE=ovs TYPE=OVSBridge OVS_EXTRA="set port $DEVICE tag=101" ONBOOT=yes BOOTPROTO=none IPADDR=192.168.101.1 PREFIX=24 DELAY=0 HOTPLUG=no NOZEROCONF=yes Конфигурируем отдельный свич, порт (VLAN 102) и интерфейс на Open vSwitch для второго inetrconnect’а кластеров.

# vi /etc/sysconfig/network-scripts/ifcfg-iconn2 DEVICE=iconn2 DEVICETYPE=ovs TYPE=OVSBridge OVS_EXTRA="set port $DEVICE tag=102" ONBOOT=yes BOOTPROTO=none IPADDR=192.168.102.1 PREFIX=24 DELAY=0 HOTPLUG=no NOZEROCONF=yes Конфигурируем отдельный свич, порт (VLAN 103) и интерфейс на Open vSwitch для трафика Data Guard.

# vi /etc/sysconfig/network-scripts/ifcfg-dg0 DEVICE=dg0 DEVICETYPE=ovs TYPE=OVSBridge OVS_EXTRA="set port $DEVICE tag=103" ONBOOT=yes BOOTPROTO=none IPADDR=192.168.103.1 PREFIX=24 DELAY=0 HOTPLUG=no NOZEROCONF=yes Создаем такие же определения интерфейсов на sby меняя HWADDR на актуальные и последнюю цифру в IP адресах для sby на 2.

Свичи iconn1, iconn2 и dg0 получились у нас изолированными и их трафик не выходит наружу. Для того чтобы виртуальные машины на prmy могли обмениваться данными по всем внутренним сетям с виртуальными машинами на sby и наоборот, мы подключим эти свичи к ovsbr0, который имеет внешний физический порт.

Реализуем это соединением всех свичей «паровозиком» при помощи Patch портов на свичах.

Определения следующих интерфейсов идентичны на prmy и sby:

# vi /etc/sysconfig/network-scripts/ifcfg-patch-ovsbr0-iconn1 DEVICE=patch-ovsbr0-iconn1 ONBOOT=yes DEVICETYPE=ovs TYPE=OVSPatchPort OVS_BRIDGE=ovsbr0 OVS_PATCH_PEER=patch-iconn1-ovsbr0 # vi /etc/sysconfig/network-scripts/ifcfg-patch-iconn1-ovsbr0 DEVICE=patch-iconn1-ovsbr0 ONBOOT=yes DEVICETYPE=ovs TYPE=OVSPatchPort OVS_BRIDGE=iconn1 OVS_PATCH_PEER=patch-ovsbr0-iconn1 # vi /etc/sysconfig/network-scripts/ifcfg-patch-iconn1-iconn2 DEVICE=patch-iconn1-iconn2 ONBOOT=yes DEVICETYPE=ovs TYPE=OVSPatchPort OVS_BRIDGE=iconn1 OVS_PATCH_PEER=patch-iconn2-iconn1 # vi /etc/sysconfig/network-scripts/ifcfg-patch-iconn2-iconn1 DEVICE=patch-iconn2-iconn1 ONBOOT=yes DEVICETYPE=ovs TYPE=OVSPatchPort OVS_BRIDGE=iconn2 OVS_PATCH_PEER=patch-iconn1-iconn2 # vi /etc/sysconfig/network-scripts/ifcfg-patch-iconn2-dg0 DEVICE=patch-iconn2-dg0 ONBOOT=yes DEVICETYPE=ovs TYPE=OVSPatchPort OVS_BRIDGE=iconn2 OVS_PATCH_PEER=patch-dg0-iconn2 # vi /etc/sysconfig/network-scripts/ifcfg-patch-dg0-iconn2 DEVICE=patch-dg0-iconn2 ONBOOT=yes DEVICETYPE=ovs TYPE=OVSPatchPort OVS_BRIDGE=dg0 OVS_PATCH_PEER=patch-iconn2-dg0

Теперь перезагружаем prmy и sby.

Проверяем получившуюся конфигурацию openvswitch:

[root@prmy ~]# ovs-vsctl show

3d20f852-5b67-4a1c-b983-e2a8caa27de1 Bridge "dg0" Port "patch-dg0-iconn2" Interface "patch-dg0-iconn2" type: patch options: {peer="patch-iconn2-dg0"} Port "dg0" tag: 103 Interface "dg0" type: internal Bridge "iconn1" Port "iconn1" tag: 101 Interface "iconn1" type: internal Port "patch-iconn1-iconn2" Interface "patch-iconn1-iconn2" type: patch options: {peer="patch-iconn2-iconn1"} Port "patch-iconn1-ovsbr0" Interface "patch-iconn1-ovsbr0" type: patch options: {peer="patch-ovsbr0-iconn1"} Bridge "iconn2" Port "iconn2" tag: 102 Interface "iconn2" type: internal Port "patch-iconn2-iconn1" Interface "patch-iconn2-iconn1" type: patch options: {peer="patch-iconn1-iconn2"} Port "patch-iconn2-dg0" Interface "patch-iconn2-dg0" type: patch options: {peer="patch-dg0-iconn2"} Bridge "ovsbr0" Port "pub0" tag: 100 Interface "pub0" type: internal Port "ovsbr0" Interface "ovsbr0" type: internal Port "enp3s0" Interface "enp3s0" Port "patch-ovsbr0-iconn1" Interface "patch-ovsbr0-iconn1" type: patch options: {peer="patch-iconn1-ovsbr0"} ovs_version: "2.3.2"

Конфигурация должна быть одинаковой на всех компьютерах.

Проверяем IP адреса:

[root@prmy ~]# ip addr show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00 inet 127.0.0.1/8 scope host lo valid_lft forever preferred_lft forever inet6 ::1/128 scope host valid_lft forever preferred_lft forever 2: enp3s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel master ovs-system state UP group default qlen 1000 link/ether 60:a4:4c:3c:93:06 brd ff:ff:ff:ff:ff:ff inet6 fe80::62a4:4cff:fe3c:9306/64 scope link valid_lft forever preferred_lft forever 3: ovs-system: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default link/ether 0a:91:1b:82:ce:a9 brd ff:ff:ff:ff:ff:ff 4: iconn1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default link/ether d6:22:60:7e:9f:48 brd ff:ff:ff:ff:ff:ff inet 192.168.101.1/24 brd 192.168.101.255 scope global iconn1 valid_lft forever preferred_lft forever inet6 fe80::d422:60ff:fe7e:9f48/64 scope link valid_lft forever preferred_lft forever 5: ovsbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default link/ether 60:a4:4c:3c:93:06 brd ff:ff:ff:ff:ff:ff inet 192.168.118.16/26 brd 192.168.118.63 scope global dynamic ovsbr0 valid_lft 62646sec preferred_lft 62646sec inet6 fe80::62a4:4cff:fe3c:9306/64 scope link valid_lft forever preferred_lft forever 6: pub0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default link/ether f2:c2:ba:85:a2:6e brd ff:ff:ff:ff:ff:ff inet 192.168.100.1/24 brd 192.168.100.255 scope global pub0 valid_lft forever preferred_lft forever inet6 fe80::f0c2:baff:fe85:a26e/64 scope link valid_lft forever preferred_lft forever 7: iconn2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default link/ether f2:e6:e8:93:f0:43 brd ff:ff:ff:ff:ff:ff inet 192.168.102.1/24 brd 192.168.102.255 scope global iconn2 valid_lft forever preferred_lft forever inet6 fe80::f0e6:e8ff:fe93:f043/64 scope link valid_lft forever preferred_lft forever 8: dg0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default link/ether ae:bb:09:25:5c:4e brd ff:ff:ff:ff:ff:ff inet 192.168.103.1/24 brd 192.168.103.255 scope global dg0 valid_lft forever preferred_lft forever inet6 fe80::acbb:9ff:fe25:5c4e/64 scope link valid_lft forever preferred_lft forever [root@sby ~]# ip addr show 1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00 inet 127.0.0.1/8 scope host lo valid_lft forever preferred_lft forever inet6 ::1/128 scope host valid_lft forever preferred_lft forever 2: enp3s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel master ovs-system state UP group default qlen 1000 link/ether 10:bf:48:e2:58:5e brd ff:ff:ff:ff:ff:ff inet6 fe80::12bf:48ff:fee2:585e/64 scope link valid_lft forever preferred_lft forever 3: ovs-system: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default link/ether 66:9a:f8:af:48:b4 brd ff:ff:ff:ff:ff:ff 4: iconn1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default link/ether da:85:ce:d4:36:4f brd ff:ff:ff:ff:ff:ff inet 192.168.101.2/24 brd 192.168.101.255 scope global iconn1 valid_lft forever preferred_lft forever inet6 fe80::d885:ceff:fed4:364f/64 scope link valid_lft forever preferred_lft forever 5: ovsbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default link/ether 10:bf:48:e2:58:5e brd ff:ff:ff:ff:ff:ff inet 192.168.118.28/26 brd 192.168.118.63 scope global dynamic ovsbr0 valid_lft 64250sec preferred_lft 64250sec inet6 fe80::12bf:48ff:fee2:585e/64 scope link valid_lft forever preferred_lft forever 6: pub0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default link/ether d6:2e:c7:82:d5:8c brd ff:ff:ff:ff:ff:ff inet 192.168.100.2/24 brd 192.168.100.255 scope global pub0 valid_lft forever preferred_lft forever inet6 fe80::d42e:c7ff:fe82:d58c/64 scope link valid_lft forever preferred_lft forever 7: iconn2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default link/ether aa:e2:ca:f4:76:4c brd ff:ff:ff:ff:ff:ff inet 192.168.102.2/24 brd 192.168.102.255 scope global iconn2 valid_lft forever preferred_lft forever inet6 fe80::a8e2:caff:fef4:764c/64 scope link valid_lft forever preferred_lft forever 8: dg0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default link/ether ca:cc:91:bd:20:47 brd ff:ff:ff:ff:ff:ff inet 192.168.103.2/24 brd 192.168.103.255 scope global dg0 valid_lft forever preferred_lft forever inet6 fe80::c8cc:91ff:febd:2047/64 scope link valid_lft forever preferred_lft forever 9: vnet0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel master ovs-system state UNKNOWN group default qlen 500 link/ether fe:16:3e:00:02:01 brd ff:ff:ff:ff:ff:ff inet6 fe80::fc16:3eff:fe00:201/64 scope link valid_lft forever preferred_lft forever 10: vnet1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel master ovs-system state UNKNOWN group default qlen 500 link/ether fe:16:3e:00:02:11 brd ff:ff:ff:ff:ff:ff inet6 fe80::fc16:3eff:fe00:211/64 scope link valid_lft forever preferred_lft forever 11: vnet2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel master ovs-system state UNKNOWN group default qlen 500 link/ether fe:16:3e:00:02:21 brd ff:ff:ff:ff:ff:ff inet6 fe80::fc16:3eff:fe00:221/64 scope link valid_lft forever preferred_lft forever 12: vnet3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel master ovs-system state UNKNOWN group default qlen 500 link/ether fe:16:3e:00:02:31 brd ff:ff:ff:ff:ff:ff inet6 fe80::fc16:3eff:fe00:231/64 scope link valid_lft forever preferred_lft forever

Проверяем что ping есть на все адреса.

Создание виртуальных машин

Готовим libvirt:

# systemctl start libvirtd # systemctl enable libvirtd Удаляем default сеть libvirt:

# virsh net-destroy default # virsh net-undefine default Создаем свои определения сетей:

#vi ovsbr0.xml

<network> <name>public</name> <forward mode='bridge'/> <bridge name='ovsbr0'/> <virtualport type='openvswitch'/> <portgroup name='public' default='yes'> <vlan> <tag id='100'/> </vlan> </portgroup> </network>

# virsh net-define ovsbr0.xml # virsh net-start public # virsh net-autostart public

#vi interconnect1.xml

<network> <name>interconnect1</name> <forward mode='bridge'/> <bridge name='iconn1'/> <virtualport type='openvswitch'/> <portgroup name='interconnect1' default='yes'> <vlan> <tag id='101'/> </vlan> </portgroup> </network>

# virsh net-define interconnect1.xml # virsh net-start interconnect1 # virsh net-autostart interconnect1

#vi interconnect2.xml

<network> <name>interconnect2</name> <forward mode='bridge'/> <bridge name='iconn2'/> <virtualport type='openvswitch'/> <portgroup name='interconnect2' default='yes'> <vlan> <tag id='102'/> </vlan> </portgroup> </network>

# virsh net-define interconnect2.xml # virsh net-start interconnect2 # virsh net-autostart interconnect2

#vi dataguard.xml

<network> <name>dataguard</name> <forward mode='bridge'/> <bridge name='dg0'/> <virtualport type='openvswitch'/> <portgroup name='dataguard' default='yes'> <vlan> <tag id='103'/> </vlan> </portgroup> </network>

# virsh net-define dataguard.xml # virsh net-start dataguard # virsh net-autostart dataguard Проверяем:

# virsh net-list Name State Autostart Persistent ---------------------------------------------------------- dataguard active yes yes interconnect1 active yes yes interconnect2 active yes yes public active yes yes Создаем диски для prmy1:

[root@prmy ~]# mkdir /var/lib/libvirt/images/prmy1 [root@prmy ~]# qemu-img create -f qcow2 /var/lib/libvirt/images/prmy1/system.qcow2 20G [root@prmy ~]# qemu-img create -f qcow2 /var/lib/libvirt/images/prmy1/u01.qcow2 16G Заставить правильно работать qemu+kvm с общими дисками в формате qcow2 мне не удалось, поэтому общие диски создаем в формате raw.

[root@prmy ~]# qemu-img create -f raw /var/lib/libvirt/images/df.img 33G [root@prmy ~]# qemu-img create -f raw /var/lib/libvirt/images/dg.img 11G Берем дистрибутив Oracle Linux 6.6 64bit и кладем его на место:

[root@prmy ~]# cp V52218-01.iso /var/lib/libvirt/images Конфигурируем NFS сервер:

[root@prmy ~]# mkdir /stage [root@prmy ~]# echo '/stage 192.168.100.0/24(ro)' > /etc/exports.d/stage.exports [root@prmy ~]# systemctl start nfs-server [root@prmy ~]# systemctl enable nfs-server В /stage скачиваем дистрибутивы Grid Infrastructure, Oracle Database 12.1.0.2 и распаковываем их.

[root@prmy stage]# cd /stage [root@prmy ~]# unzip -q linuxamd64_12102_database_1of2.zip [root@prmy ~]# unzip -q linuxamd64_12102_database_2of2.zip [root@prmy ~]# unzip -q linuxamd64_12102_grid_1of2.zip [root@prmy ~]# unzip -q linuxamd64_12102_grid_2of2.zip Создаем определение виртуальной машины prmy1:

[root@prmy stage]# mkdir prmy1 [root@prmy stage]# cd prmy1 [root@prmy prmy1]# vi prmy1.xml

<domain type='kvm'> <name>prmy1</name> <memory unit='MiB'>4300</memory> <currentMemory unit='MiB'>4300</currentMemory> <memoryBacking> <hugepages/> </memoryBacking> <vcpu placement='static'>1</vcpu> <os> <type arch='x86_64' machine='pc-i440fx-2.3'>hvm</type> </os> <features> <acpi/> <apic/> <pae/> </features> <cpu mode='host-model'> <model fallback='allow'/> </cpu> <clock offset='utc'> <timer name='rtc' tickpolicy='catchup'/> <timer name='pit' tickpolicy='delay'/> <timer name='hpet' present='no'/> </clock> <on_poweroff>destroy</on_poweroff> <on_reboot>restart</on_reboot> <on_crash>restart</on_crash> <pm> <suspend-to-mem enabled='no'/> <suspend-to-disk enabled='no'/> </pm> <devices> <emulator>/usr/bin/qemu-kvm</emulator> <disk type='file' device='disk'> <driver name='qemu' type='qcow2'/> <source file='/var/lib/libvirt/images/prmy1/system.qcow2'/> <target dev='vda' bus='virtio'/> <boot order='1'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x06' function='0x0'/> </disk> <disk type='file' device='disk'> <driver name='qemu' type='qcow2'/> <source file='/var/lib/libvirt/images/prmy1/u01.qcow2'/> <target dev='vdb' bus='virtio'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x0'/> </disk> <disk type='file' device='disk'> <driver name='qemu' type='raw'/> <source file='/var/lib/libvirt/images/df.img'/> <target dev='vdc' bus='virtio'/> <shareable/> <address type='pci' domain='0x0000' bus='0x00' slot='0x08' function='0x0'/> </disk> <disk type='file' device='disk'> <driver name='qemu' type='raw'/> <source file='/var/lib/libvirt/images/dg.img'/> <target dev='vdd' bus='virtio'/> <shareable/> <address type='pci' domain='0x0000' bus='0x00' slot='0x09' function='0x0'/> </disk> <disk type='file' device='cdrom'> <driver name='qemu' type='raw'/> <source file='/var/lib/libvirt/images/V52218-01.iso'/> <target dev='hda' bus='ide'/> <readonly/> <boot order='2'/> <address type='drive' controller='0' bus='0' target='0' unit='0'/> </disk> <controller type='usb' index='0' model='ich9-ehci1'> <address type='pci' domain='0x0000' bus='0x00' slot='0x05' function='0x7'/> </controller> <controller type='usb' index='0' model='ich9-uhci1'> <master startport='0'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x05' function='0x0' multifunction='on'/> </controller> <controller type='usb' index='0' model='ich9-uhci2'> <master startport='2'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x05' function='0x1'/> </controller> <controller type='usb' index='0' model='ich9-uhci3'> <master startport='4'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x05' function='0x2'/> </controller> <controller type='pci' index='0' model='pci-root'/> <controller type='virtio-serial' index='0'> <address type='pci' domain='0x0000' bus='0x00' slot='0x04' function='0x0'/> </controller> <controller type='ide' index='0'> <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x1'/> </controller> <interface type='network'> <mac address='00:16:3e:00:01:01'/> <source network='public' portgroup='public'/> <model type='virtio'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x03' function='0x0'/> </interface> <interface type='network'> <mac address='00:16:3e:00:01:11'/> <source network='interconnect1' portgroup='interconnect1'/> <model type='virtio'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x0b' function='0x0'/> </interface> <interface type='network'> <mac address='00:16:3e:00:01:21'/> <source network='interconnect2' portgroup='interconnect2'/> <model type='virtio'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x0c' function='0x0'/> </interface> <interface type='network'> <mac address='00:16:3e:00:01:31'/> <source network='dataguard' portgroup='dataguard'/> <model type='virtio'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x0d' function='0x0'/> </interface> <serial type='pty'> <target port='0'/> </serial> <console type='pty'> <target type='serial' port='0'/> </console> <channel type='unix'> <source mode='bind' path='/var/lib/libvirt/qemu/channel/target/prmy1.org.qemu.guest_agent.0'/> <target type='virtio' name='org.qemu.guest_agent.0'/> <address type='virtio-serial' controller='0' bus='0' port='1'/> </channel> <channel type='spicevmc'> <target type='virtio' name='com.redhat.spice.0'/> <address type='virtio-serial' controller='0' bus='0' port='2'/> </channel> <input type='tablet' bus='usb'/> <input type='mouse' bus='ps2'/> <input type='keyboard' bus='ps2'/> <graphics type='spice' autoport='yes'/> <video> <model type='qxl' ram='65536' vram='65536' vgamem='16384' heads='1'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x0'/> </video> <memballoon model='virtio'> <address type='pci' domain='0x0000' bus='0x00' slot='0x0a' function='0x0'/> </memballoon> </devices> </domain>

Создаем виртуалку:

[root@prmy prmy1]# virsh define prmy1.xml Запускаем Virtual Machine Manager и инсталлируем операционную систему любым удобным для вас способом. Сетевые интерфейсы должны получить свои IP адреса с нашего DHCP. 200MB отдаем для /boot, 8GB для swap и остальное для /. В качестве файловой системы для / и /boot используем, например ext3.

В состав пакетов для установки включим группы пакетов:

@core @base @x11 @basic-desktop @general-desktop @guest-agents а также пакеты:

oracleasm-support oracle-rdbms-server-12cR1-preinstall nscd ntp

Конфигурирование Oracle Linux 6

Подключаемся к созданной виртуалке и конфигурируем ее:

[root@prmy ~]# ssh -X root@prmy1.example.com Избавляемся от GNOME:

[root@prmy1 ~]# vi /etc/inittab id:3:initdefault: Избавляемся от вопросов при удалении файла пользователем root:

[root@prmy1 ~]# sed -i -e 's/alias/#&/gi' /root/.bashrc Избавляемся от запуска ненужных сервисов типа cups и прочих командой chkconfig:

[root@prmy1 ~]# chkconfig cups off … Сервисы ntpd и nscd наоборот включаем:

[root@prmy1 ~]# chkconfig ntpd on [root@prmy1 ~]# chkconfig nscd on У меня после перезагрузки получилось следующее:

[root@prmy1 ~]# chkconfig --list | grep :on acpid 0:off 1:off 2:on 3:on 4:on 5:on 6:off atd 0:off 1:off 2:off 3:on 4:on 5:on 6:off auditd 0:off 1:off 2:on 3:on 4:on 5:on 6:off blk-availability 0:off 1:on 2:on 3:on 4:on 5:on 6:off cpuspeed 0:off 1:on 2:on 3:on 4:on 5:on 6:off crond 0:off 1:off 2:on 3:on 4:on 5:on 6:off haldaemon 0:off 1:off 2:off 3:on 4:on 5:on 6:off irqbalance 0:off 1:off 2:off 3:on 4:on 5:on 6:off mcelogd 0:off 1:off 2:off 3:on 4:off 5:on 6:off messagebus 0:off 1:off 2:on 3:on 4:on 5:on 6:off netfs 0:off 1:off 2:off 3:on 4:on 5:on 6:off network 0:off 1:off 2:on 3:on 4:on 5:on 6:off nfslock 0:off 1:off 2:off 3:on 4:on 5:on 6:off nscd 0:off 1:off 2:on 3:on 4:on 5:on 6:off ntpd 0:off 1:off 2:on 3:on 4:on 5:on 6:off oracle-rdbms-server-12cR1-preinstall-firstboot 0:off 1:off 2:on 3:on 4:on 5:on 6:off oracleasm 0:off 1:off 2:on 3:on 4:on 5:on 6:off portreserve 0:off 1:off 2:on 3:on 4:on 5:on 6:off qemu-ga 0:off 1:off 2:on 3:on 4:on 5:on 6:off rpcbind 0:off 1:off 2:on 3:on 4:on 5:on 6:off rpcgssd 0:off 1:off 2:off 3:on 4:on 5:on 6:off rsyslog 0:off 1:off 2:on 3:on 4:on 5:on 6:off spice-vdagentd 0:off 1:off 2:off 3:off 4:off 5:on 6:off sshd 0:off 1:off 2:on 3:on 4:on 5:on 6:off sysstat 0:off 1:on 2:on 3:on 4:on 5:on 6:off udev-post 0:off 1:on 2:on 3:on 4:on 5:on 6:off

Конфигурируем ntpd:

[root@prmy1 ~]# vi /etc/sysconfig/ntpd # Drop root to id 'ntp:ntp' by default. OPTIONS="-x -u ntp:ntp -p /var/run/ntpd.pid -g" # Set to 'yes' to sync hw clock after successful ntpdate SYNC_HWCLOCK=no # Additional options for ntpdate NTPDATE_OPTIONS=""

[root@prmy1 ~]# vi /etc/ntp.conf

# For more information about this file, see the man pages # ntp.conf(5), ntp_acc(5), ntp_auth(5), ntp_clock(5), ntp_misc(5), ntp_mon(5). driftfile /var/lib/ntp/drift # Permit time synchronization with our time source, but do not # permit the source to query or modify the service on this system. restrict default kod nomodify notrap nopeer noquery restrict -6 default kod nomodify notrap nopeer noquery # Permit all access over the loopback interface. This could # be tightened as well, but to do so would effect some of # the administrative functions. restrict 127.0.0.1 restrict -6 ::1 # Hosts on local network are less restricted. #restrict 192.168.1.0 mask 255.255.255.0 nomodify notrap # Use public servers from the pool.ntp.org project. # Please consider joining the pool (http://www.pool.ntp.org/join.html). server 192.168.100.1 server 127.127.1.0 # local clock fudge 127.127.1.0 stratum 10 broadcastdelay 0.008 # Key file containing the keys and key identifiers used when operating # with symmetric key cryptography. keys /etc/ntp/keys

Конфигурируем лимиты:

[root@prmy1 ~]# vi /etc/security/limits.conf

oracle soft nofile 131072 oracle hard nofile 131072 oracle soft nproc 131072 oracle hard nproc 131072 oracle soft core unlimited oracle hard core unlimited oracle soft memlock 3828161 oracle hard memlock 3828161 grid soft nofile 131072 grid hard nofile 131072 grid soft nproc 131072 grid hard nproc 131072 grid soft core unlimited grid hard core unlimited grid soft memlock 3828161 grid hard memlock 3828161 # Recommended stack hard limit 32MB for oracle installations # oracle hard stack 32768

[root@prmy1 ~]# cd /etc/profile.d [root@prmy1 profile.d]# vi oracle.sh if [ $USER = "oracle" ] || [ $USER = "grid" ]; then if [ /bin/bash = "/bin/ksh" ]; then ulimit -p 16384 ulimit -n 65536 else ulimit -u 16384 -n 65536 fi umask 022 fi

[root@prmy1 profile.d]# ln -s oracle.sh oracle.csh

[root@prmy1 ~]# sed -i -r -e 's/(tmpfs.*)(defaults)/\1size=3800M,rw,exec/gi' /etc/fstab eth0,eth3 — public eth1, eth2 — interconnect

[root@prmy1 ~]# echo "net.ipv4.conf.eth0.rp_filter = 1" >> /etc/sysctl.conf [root@prmy1 ~]# echo "net.ipv4.conf.eth3.rp_filter = 1" >> /etc/sysctl.conf [root@prmy1 ~]# echo "net.ipv4.conf.eth1.rp_filter = 2" >> /etc/sysctl.conf [root@prmy1 ~]# echo "net.ipv4.conf.eth2.rp_filter = 2" >> /etc/sysctl.conf Создаем группы для Oracle:

[root@prmy1 ~]# groupadd oper [root@prmy1 ~]# groupadd asmdba [root@prmy1 ~]# groupadd asmoper [root@prmy1 ~]# groupadd asmadmin Включаем пользователя oracle в группы dba, oper и asmdba

[root@prmy1 ~]# usermod -G dba,oper,asmdba oracle [root@prmy1 ~]# passwd oracle Создаем пользователя grid:

[root@prmy1 ~]# useradd -m -g oinstall -G asmdba,asmoper,asmadmin grid [root@prmy1 ~]# passwd grid Для удобства будем отображать имя экземпляра Oracle в подсказке bash:

[root@prmy1 ~]# echo 'export PS1="[\u@\h-\`echo \$ORACLE_SID\` \W]$ "' >> /home/oracle/.bashrc [root@prmy1 ~]# echo 'export PS1="[\u@\h-\`echo \$ORACLE_SID\` \W]$ "' >> /home/grid/.bashrc [root@prmy1 ~]# echo 'export PS1="[\u@\h-\`echo \$ORACLE_SID\` \W]# "' >> /root/.bashrc Создаем раздел и файловую систему на диске предназначенном для установки софта Oracle.

Должно получиться так:

[root@sby1- ~]# fdisk -u -c -l /dev/vdb Disk /dev/vdb: 17.2 GB, 17179869184 bytes 3 heads, 2 sectors/track, 5592405 cylinders, total 33554432 sectors Units = sectors of 1 * 512 = 512 bytes Sector size (logical/physical): 512 bytes / 512 bytes I/O size (minimum/optimal): 512 bytes / 512 bytes Disk identifier: 0xf12e0427 Device Boot Start End Blocks Id System /dev/vdb1 2048 33554431 16776192 83 Linux

[root@prmy1 ~]# mkfs.ext3 -L u01 /dev/vdb1 Монтировать разделы я хочу не по uuid, а по LABEL:

[root@prmy1 ~]# tune2fs -L boot /dev/vda1 [root@prmy1 ~]# tune2fs -L root /dev/vda3 [root@prmy1 ~]# swapoff [root@prmy1 ~]# mkswap -L swap /dev/vda2 [root@prmy1 ~]# vi /etc/fstab LABEL=root / ext3 defaults 1 1 LABEL=boot /boot ext3 defaults 1 2 LABEL=swap swap swap defaults 0 0 tmpfs /dev/shm tmpfs defaults 0 0 devpts /dev/pts devpts gid=5,mode=620 0 0 sysfs /sys sysfs defaults 0 0 proc /proc proc defaults 0 0 LABEL=u01 /u01 ext3 defaults 0 0

[root@prmy1 ~]# mount -a [root@prmy1 ~]# mkdir -p /u01/app/12.1.0/grid [root@prmy1 ~]# mkdir -p /u01/app/grid [root@prmy1 ~]# mkdir -p /u01/app/oracle [root@prmy1 ~]# chown -R grid:oinstall /u01 [root@prmy1 ~]# chown oracle:oinstall /u01/app/oracle [root@prmy1 ~]# chmod -R 775 /u01/ Нарезаем разделы для ASM дисков, у меня получилось в итоге так:

[root@sby1- ~]# fdisk -l -u -c /dev/vdc Disk /dev/vdc: 35.4 GB, 35433480192 bytes 16 heads, 63 sectors/track, 68656 cylinders, total 69206016 sectors Units = sectors of 1 * 512 = 512 bytes Sector size (logical/physical): 512 bytes / 512 bytes I/O size (minimum/optimal): 512 bytes / 512 bytes Disk identifier: 0x8cee7a8e Device Boot Start End Blocks Id System /dev/vdc1 2048 5656927 2827440 83 Linux /dev/vdc2 5656928 11311807 2827440 83 Linux /dev/vdc3 11311808 16966687 2827440 83 Linux /dev/vdc4 16966688 69206015 26119664 5 Extended /dev/vdc5 16968736 22623615 2827440 83 Linux /dev/vdc6 22625664 28280543 2827440 83 Linux /dev/vdc7 28282592 33937471 2827440 83 Linux /dev/vdc8 33939520 39594399 2827440 83 Linux /dev/vdc9 39596448 45251327 2827440 83 Linux /dev/vdc10 45253376 50908255 2827440 83 Linux /dev/vdc11 50910304 56565183 2827440 83 Linux /dev/vdc12 56567232 62222111 2827440 83 Linux /dev/vdc13 62224160 67879039 2827440 83 Linux

[root@sby1- ~]# fdisk -u -c -l /dev/vdd Disk /dev/vdd: 11.8 GB, 11811160064 bytes 1 heads, 32 sectors/track, 720896 cylinders, total 23068672 sectors Units = sectors of 1 * 512 = 512 bytes Sector size (logical/physical): 512 bytes / 512 bytes I/O size (minimum/optimal): 512 bytes / 512 bytes Disk identifier: 0x3416c855 Device Boot Start End Blocks Id System /dev/vdd1 2048 5656927 2827440 83 Linux /dev/vdd2 5656928 11311807 2827440 83 Linux /dev/vdd3 11311808 16966687 2827440 83 Linux /dev/vdd4 16966688 22621567 2827440 83 Linux С сайта Oracle скачиваем пакет oracleasmlib-2.0.4-1.el6.x86_64 помещаем на prmy в /stage и устанавливаем его:

[root@prmy1 ~]# mount prmy:/stage /stage [root@prmy1 ~]# rpm -Uvh oracleasmlib-2.0.4-1.el6.x86_64 [root@prmy1 ~]# rpm -Uvh /stage/grid/rpm/cvuqdisk-1.0.9-1.rpm [root@prmy1 ~]# umount /stage Конфигурируем oracleasm:

[root@prmy1 ~]# /usr/sbin/oracleasm configure -e -u grid -g asmadmin -s y Создаем ASM диски:

[root@prmy1 ~]# oracleasm createdisk ASMDISK1P1 /dev/vdc1 [root@prmy1 ~]# oracleasm createdisk ASMDISK1P2 /dev/vdc2 [root@prmy1 ~]# oracleasm createdisk ASMDISK1P3 /dev/vdc3 [root@prmy1 ~]# oracleasm createdisk ASMDISK1P4 /dev/vdc5 [root@prmy1 ~]# oracleasm createdisk ASMDISK1P5 /dev/vdc6 [root@prmy1 ~]# oracleasm createdisk ASMDISK1P6 /dev/vdc7 [root@prmy1 ~]# oracleasm createdisk ASMDISK1P7 /dev/vdc8 [root@prmy1 ~]# oracleasm createdisk ASMDISK1P8 /dev/vdc9 [root@prmy1 ~]# oracleasm createdisk ASMDISK1P9 /dev/vdc10 [root@prmy1 ~]# oracleasm createdisk ASMDISK1P10 /dev/vdc11 [root@prmy1 ~]# oracleasm createdisk ASMDISK1P11 /dev/vdc12 [root@prmy1 ~]# oracleasm createdisk ASMDISK1P12 /dev/vdc13 [root@prmy1 ~]# oracleasm createdisk ASMDISK2P1 /dev/vdd1 [root@prmy1 ~]# oracleasm createdisk ASMDISK2P2 /dev/vdd2 [root@prmy1 ~]# oracleasm createdisk ASMDISK2P3 /dev/vdd3 [root@prmy1 ~]# oracleasm createdisk ASMDISK2P4 /dev/vdd4 На этом конфигурирование виртуалки можно считать законченным, теперь выключаем ее приступаем к ее клонированию. Но перед этим для облегчения клонирования скопируем с нее файлы, которые будут разными в каждой виртуалке.

[root@prmy1 ~]# ssh prmy mkdir /stage/prmy1 [root@prmy1 ~]# scp /etc/sysconfig/network prmy:/stage/prmy1 [root@prmy1 ~]# scp /etc/sysconfig/network/scripts/ifcfg-eth* prmy:/stage/prmy1 [root@prmy1 ~]# scp /etc/udev/rules.d/70-persistent-net.rules prmy:/stage/prmy1 [root@prmy1 ~]# poweroff

Клонирование виртуальных машин

Копируем общие диски на sby:

[root@prmy ~]# scp /var/lib/libvirt/images/*.img sby:/var/lib/libvirt/images Создаем каталоги для виртуалок:

[root@prmy ~]# mkdir /var/lib/libvirt/images/prmy2 [root@prmy ~]# mkdir /var/lib/libvirt/images/prmy3 [root@prmy ~]# ssh sby mkdir /var/lib/libvirt/images/sby1 [root@prmy ~]# ssh sby mkdir /var/lib/libvirt/images/sby2 [root@prmy ~]# ssh sby mkdir /var/lib/libvirt/images/sby3 Копируем наши «эталонные» образы system.qcow и u01.qcow2 на sby.

[root@prmy ~]# scp /var/lib/libvirt/images/prmy1/system.qcow sby:/var/lib/libvirt/images [root@prmy ~]# scp /var/lib/libvirt/images/prmy1/ u01.qcow2 sby:/var/lib/libvirt/images/sby1 [root@prmy ~]# scp /var/lib/libvirt/images/prmy1/ u01.qcow2 sby:/var/lib/libvirt/images/sby2 [root@prmy ~]# scp /var/lib/libvirt/images/prmy1/ u01.qcow2 sby:/var/lib/libvirt/images/sby3 Клонируем виртуальный диск system.qcow2 на prmy:

[root@prmy ~]# mv /var/lib/libvirt/images/prmy1/system.qcow2 /var/lib/libvirt/images [root@prmy ~]# qemu-img create -f qcow2 -o backing_file=/var/lib/libvirt/images/system.qcow2 /var/lib/libvirt/images/prmy1/system.qcow2 [root@prmy ~]# qemu-img create -f qcow2 -o backing_file=/var/lib/libvirt/images/system.qcow2 /var/lib/libvirt/images/prmy2/system.qcow2 [root@prmy ~]# qemu-img create -f qcow2 -o backing_file=/var/lib/libvirt/images/system.qcow2 /var/lib/libvirt/images/prmy3/system.qcow2 Диск u01.qcow2 просто копируем:

[root@prmy ~]# cp /var/lib/libvirt/images/prmy1/u01.qcow2 /var/lib/libvirt/images/prmy2/u01.qcow2 [root@prmy ~]# cp /var/lib/libvirt/images/prmy1/u01.qcow2 /var/lib/libvirt/images/prmy3/u01.qcow2 Готовим файлы на замену в виртуалках:

[root@prmy ~]# mkdir /stage/{prmy2, prmy3} [root@prmy ~]# cd /stage/prmy1 [root@prmy prmy1]# cp ifcfg-eth* network 70-persistent-net.rules ../prmy2 [root@prmy prmy1]# cp ifcfg-eth* network 70-persistent-net.rules ../prmy3 [root@prmy prmy1]# scp ifcfg-eth* network 70-persistent-net.rules sby:/stage/sby1 [root@prmy prmy1]# scp ifcfg-eth* network 70-persistent-net.rules sby:/stage/sby2 [root@prmy prmy1]# scp ifcfg-eth* network 70-persistent-net.rules sby:/stage/sby3 Туда же копируем определение виртуальной машины prmy1:

[root@prmy prmy1]# cp prmy1.xml /stage/prmy2/prmy2.xml [root@prmy prmy1]# cp prmy1.xml /stage/prmy3/prmy3.xml [root@prmy prmy1]# scp prmy1.xml sby:/stage/sby1/sby1.xml [root@prmy prmy1]# scp prmy1.xml sby:/stage/sby2/sby3.xml [root@prmy prmy1]# scp prmy1.xml sby:/stage/sby3/sby3.xml [root@prmy prmy1]# cd ../prmy2 Редактируем файлы:

[root@prmy prmy2]# sed -i -r -e "s/prmy1/prmy2/gi" network [root@prmy prmy2]# sed -i -r -e 's/(00:16:3E:00:0)(.)(:.)(1)/\11\32/gi' ifcfg-eth* 70-persistent-net.rules Копируем их в образ виртуальной машины prmy2:

[root@prmy prmy2]# guestfish << EOF add /var/lib/libvirt/images/prmy2/system.qcow2 run mount /dev/sda3 / copy-in network /etc/sysconfig copy-in ifcfg-eth0 /etc/sysconfig/network-scripts copy-in ifcfg-eth1 /etc/sysconfig/network-scripts copy-in ifcfg-eth2 /etc/sysconfig/network-scripts copy-in ifcfg-eth3 /etc/sysconfig/network-scripts copy-in 70-persistent-net.rules /etc/udev/rules.d umount / exit EOF Редактируем определение виртуалной машины prmy2.

меняем имена:

[root@prmy prmy2]# sed -i -r -e "s/prmy1/prmy2/gi" prmy2.xml меняем mac адреса:

[root@prmy prmy2]# sed -i -r -e 's/(00:16:3E:00:0)(.)(:.)(1)/\11\32/gi' prmy2.xml удаляем CDROM:

[root@prmy prmy2]# sed -i "/<disk.*cdrom/,/<\/disk>/ {; d; }" prmy2.xml удаляем IDE контроллер:

[root@prmy prmy2]# sed -i "/<controller.*ide/,/<\/controller>/ {; d; }" prmy2.xml И создаем виртуальную машину prmy2:

[root@prmy prmy2]# virsh define prmy2.xml Продолжаем в том же духе с prmy3.

Имена меняем командой:

[root@prmy prmy3]# sed -i -r -e "s/prmy1/prmy3/gi" network prmy3.xml Mac адреса соответственно:

[root@prmy prmy3]# sed -i -r -e 's/(00:16:3E:00:0)(.)(:.)(1)/\11\33/gi' ifcfg-eth* 70-persistent-net.rules prmy3.xml Запускаем виртуальные машины:

[root@prmy ~]# virsh start prmy1 [root@prmy ~]# virsh start prmy2 [root@prmy ~]# virsh start prmy3 Подключаемся к ним проверяем что имя у них правильное, соседок по сети, DNS и NTP сервера они видит, диски на месте:

# hostname # ping # df -h # oracleasm listdisks Похожую процедуру нужно проделать с виртуальными машинами на sby.example.com.

Например имена и Mac адреса в файлах для sby1 меняем так:

[root@sby sby1]# sed -i -r -e "s/prmy1/sby1/gi" network sby1.xml [root@sby sby1]# sed -i -r -e 's/(00:16:3E:00:0)(.)(:.)(1)/\12\31/gi' ifcfg-eth* 70-persistent-net.rules sby1.xml sby2:

[root@sby sby2]# sed -i -r -e "s/prmy1/sby2/gi" network sby2.xml [root@sby sby2]# sed -i -r -e 's/(00:16:3E:00:0)(.)(:.)(1)/\12\32/gi' ifcfg-eth* 70-persistent-net.rules sby2.xml sby3:

[root@sby sby2]# sed -i -r -e "s/prmy1/sby3/gi" network sby3.xml [root@sby sby2]# sed -i -r -e 's/(00:16:3E:00:0)(.)(:.)(1)/\12\33/gi' ifcfg-eth* 70-persistent-net.rules sby3.xml Их тоже стартуем:

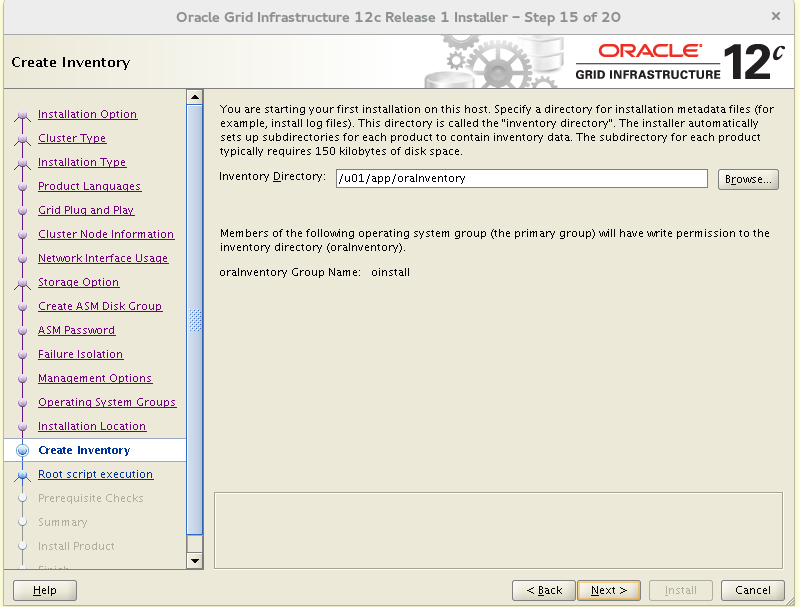

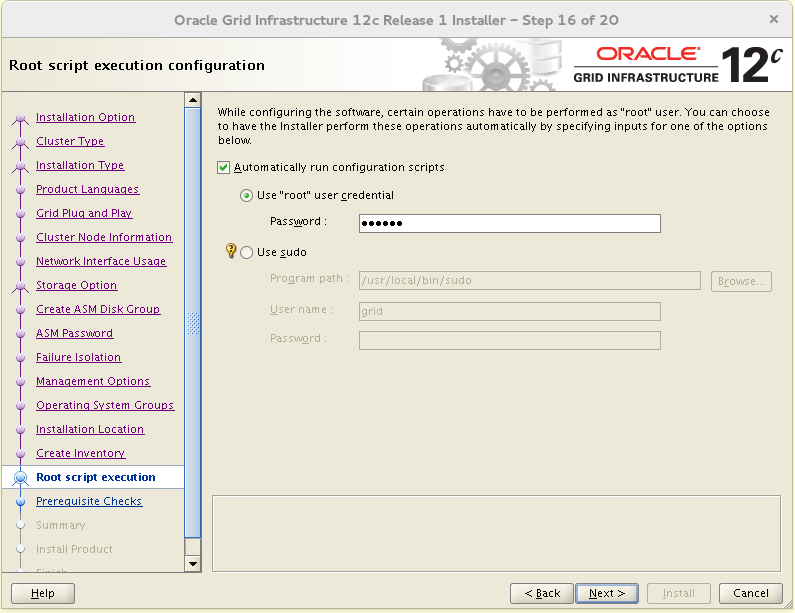

[root@sby ~]# virsh start sby1 [root@sby ~]# virsh start sby2 [root@sby ~]# virsh start sby3 Инсталляция Grid Infrastructure

Процесс довольно длинный и скучный.

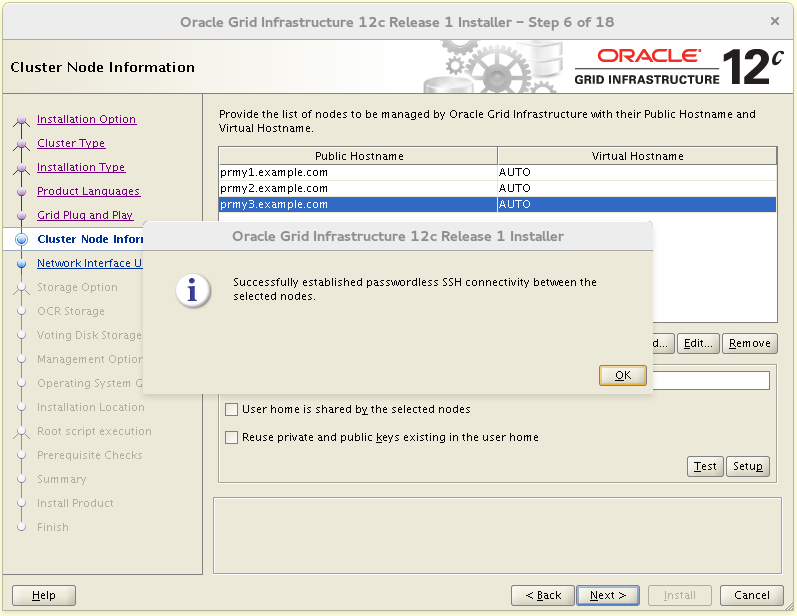

Инсталлируем Grid Infrastructure на prmy:

[root@prmy ~]# ssh -X grid@prmy1 [grid@prmy1- ~]$ su - [root@prmy1 ~]# mount prmy:/stage /stage [root@prmy1 ~]# exit [grid@prmy1- ~]$ /stage/grid/runInstaller

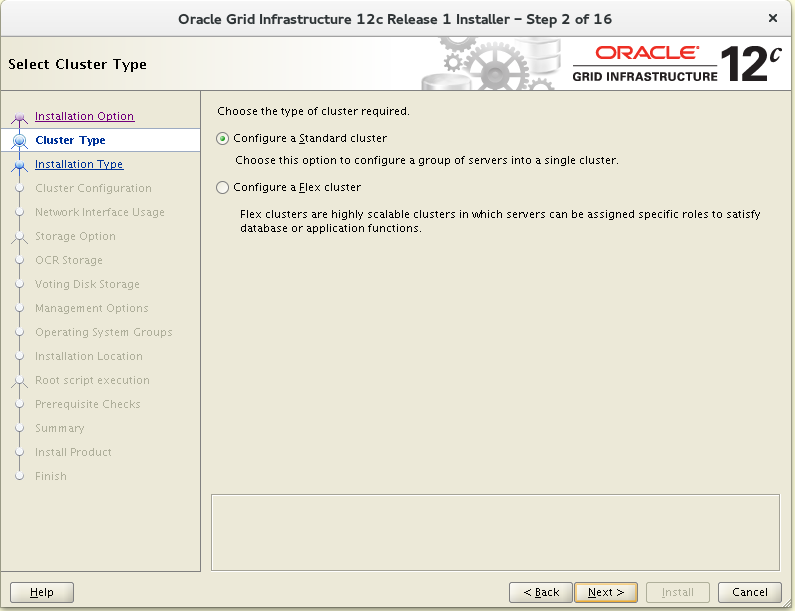

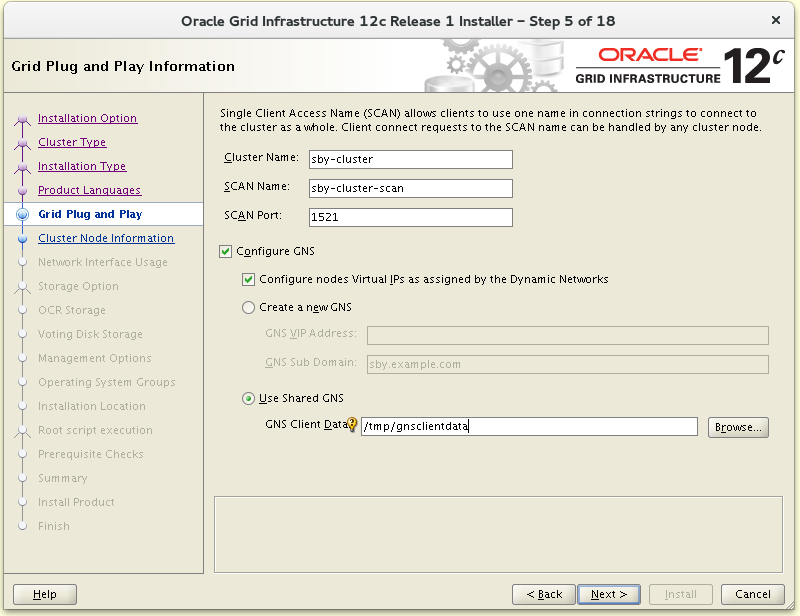

Flex Cluster нам не нужен.

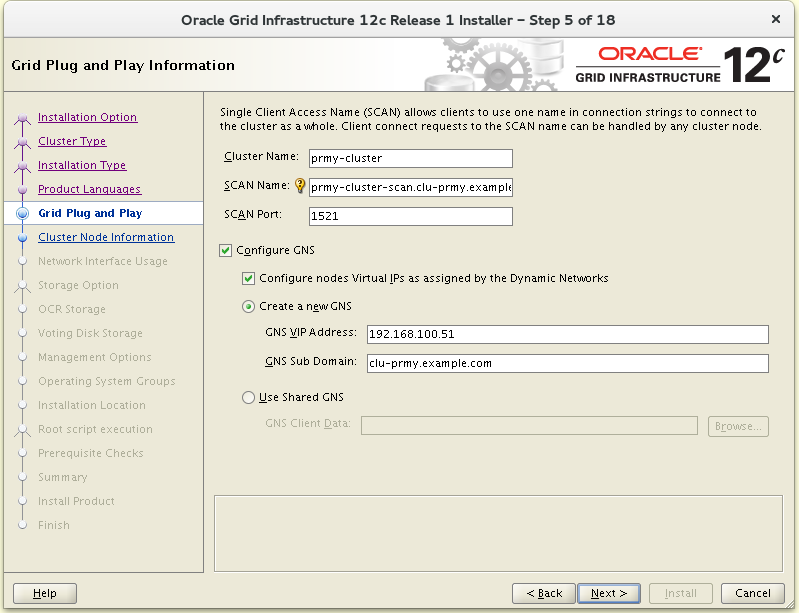

Сконфигурируем GNS на домене, указанном в DNS при делегировании зоны.

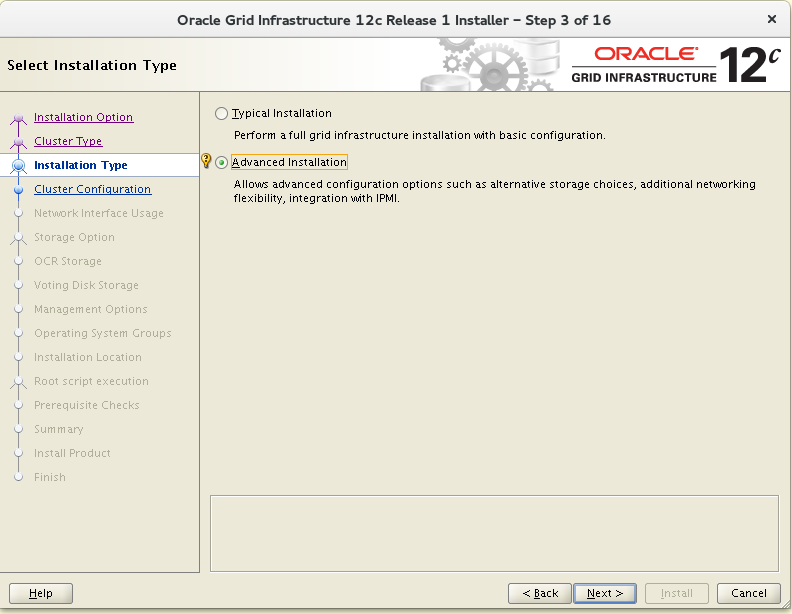

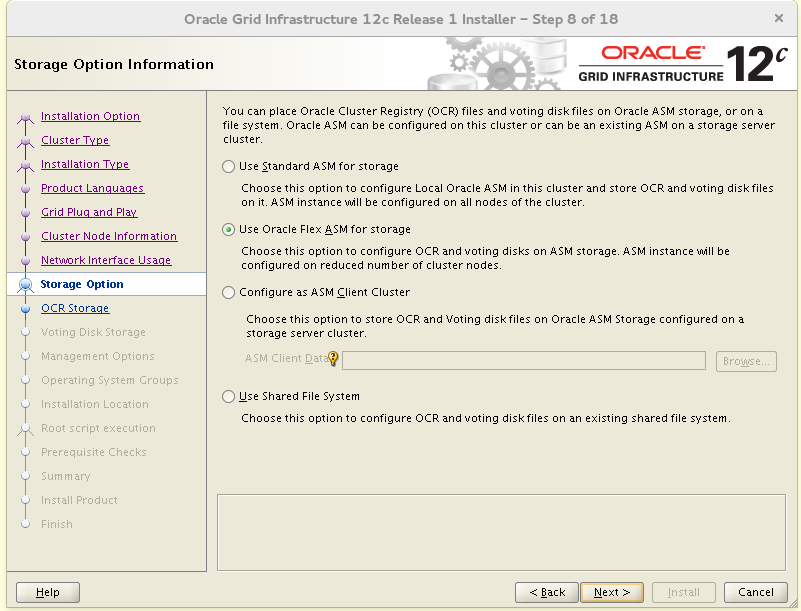

Сконфигурируем Flex ASM, хотя он тут по большому счету не нужен. Впрочем это же стенд для изучения возможностей и можно ограничить количество узлов на которых стартует ASM двумя. При использовании стандартного ASM можно было сэкономить по памяти — его минимальные требования 300M вместо 1G у Flex ASM.

Берем первые 8 дисков

Если сделали вся как у меня, то проверки и инсталляция должна пройти без замечаний.

После окончания инсталляции выгрузим данные GNS для подключения клиента:

[grid@prmy ~]# su - [root@prmy1 ~]# . oraenv ORACLE_SID = [root] ? +ASM1 The Oracle base has been set to /u01/app/grid [root@prmy1 ~]# srvctl export gns -clientdata /tmp/gnsclientdata root@prmy1 ~]# scp /tmp/gnsclientdata sby1:/tmp/gnsclientdata GNS будет в одном экземпляре. Резервный кластер будет использовать GNS основного.

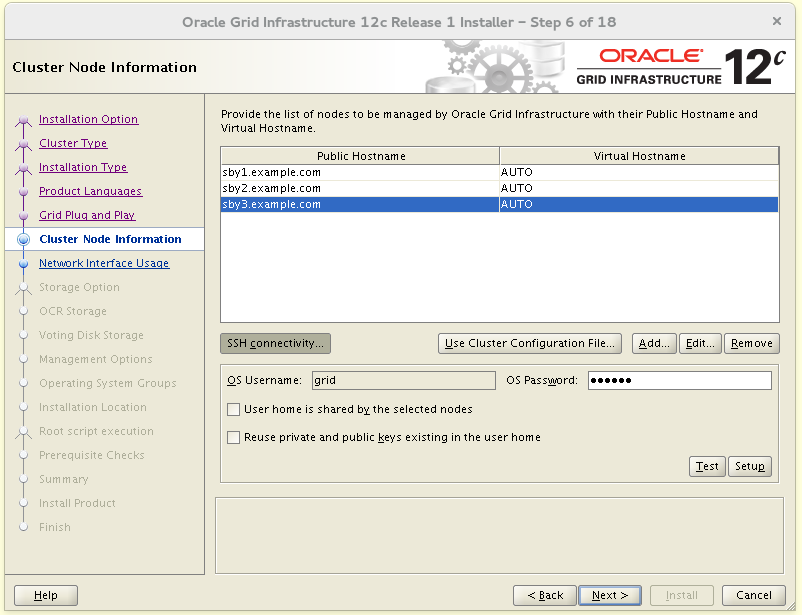

Инсталляция Grid Infrastructure на sby похожа на то что мы уже делали за исключением имен узлов и GNS.

[root@sby ~]# ssh -X grid@sby1 [grid@sby1- ~]$ su - [root@sby1 ~]# mount prmy:/stage /stage [root@sby1 ~]# exit [grid@sby1- ~]$ /stage/grid/runInstaller Если бы мы выбрали инсталляцию Flex Cluster, то не смогли бы использовать GNS соседнего кластера.

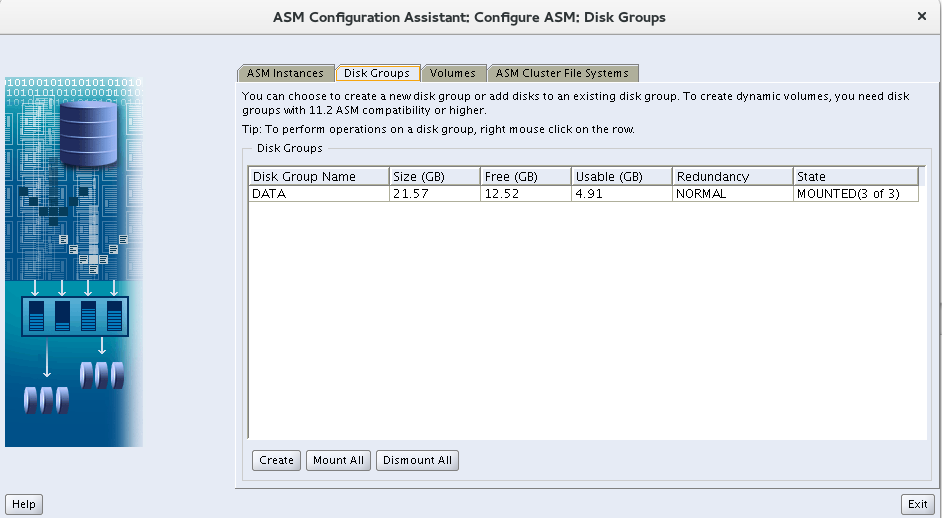

Создание дополнительных дисковых групп ASM

Нам понадобятся дополнительные дисковые группы на обоих кластерах:

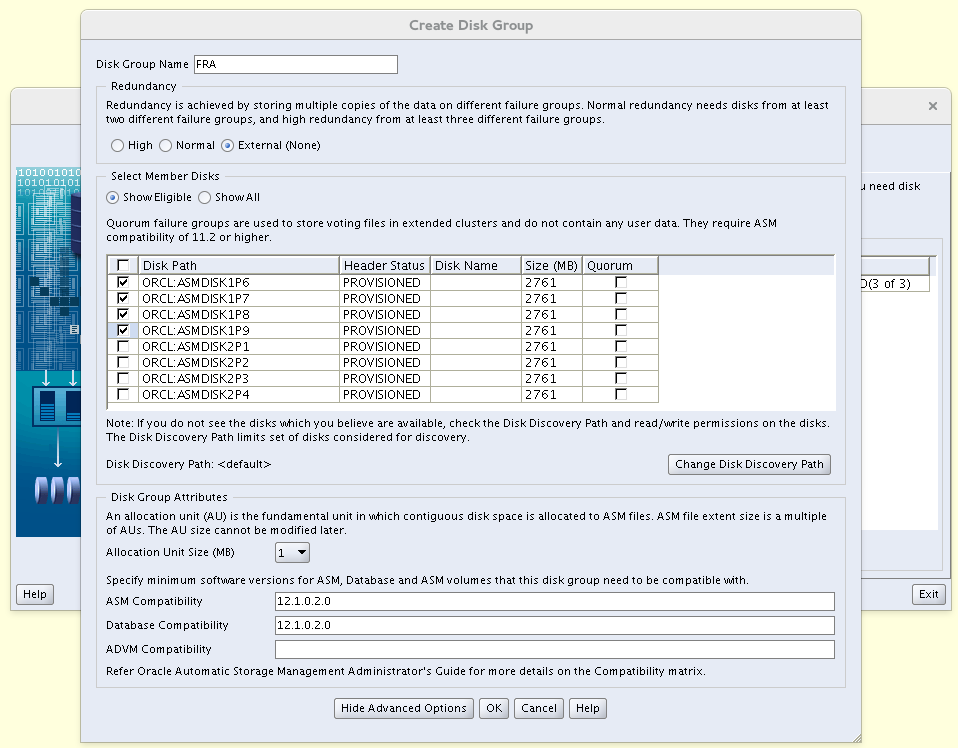

FRA — Fast Recovery Area

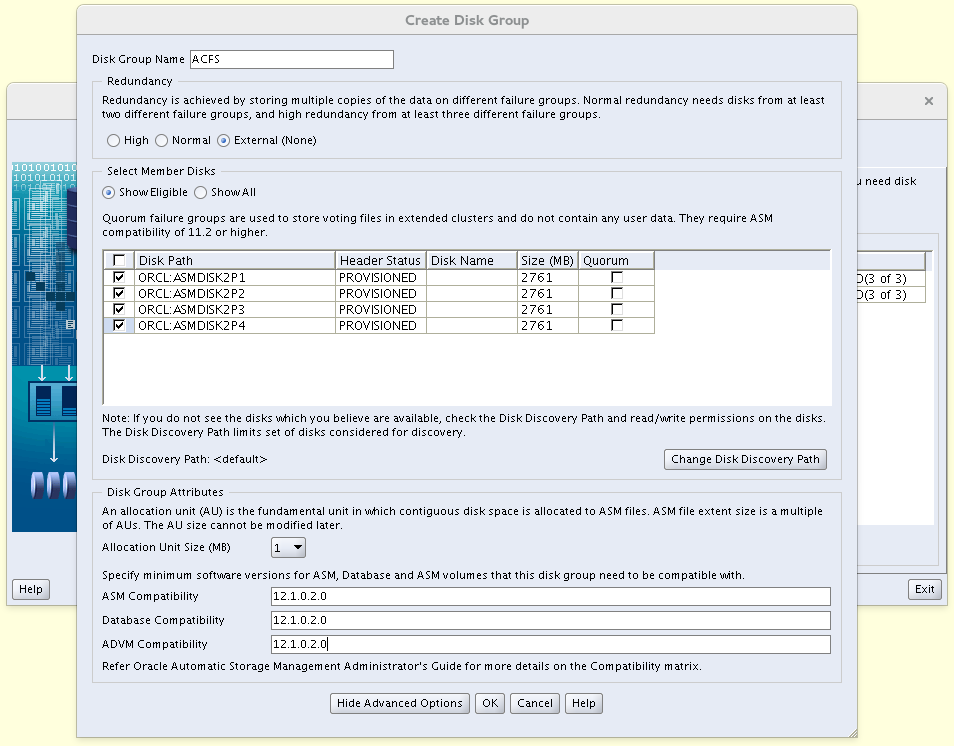

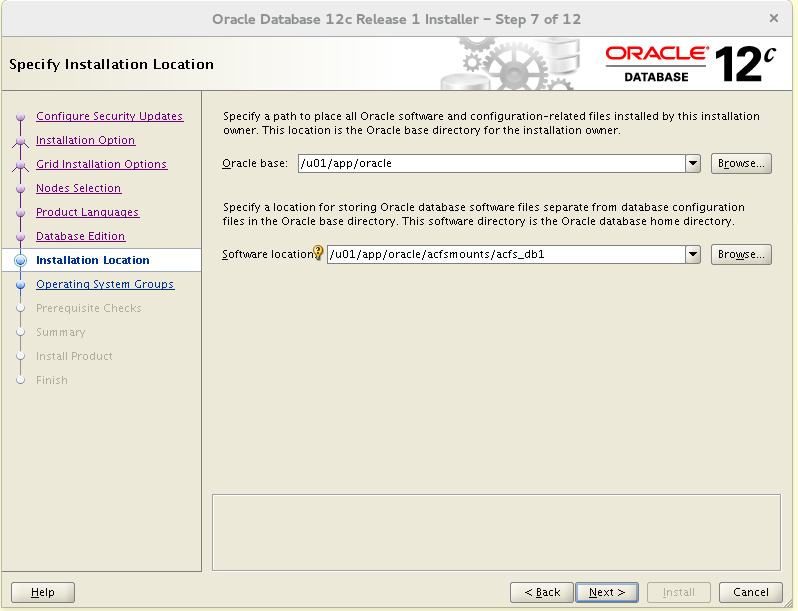

ACFS — для общего Oracle Home Oracle Database.

Под каждую дисковую группу берем по 4 оставшихся диска, redundancy external.

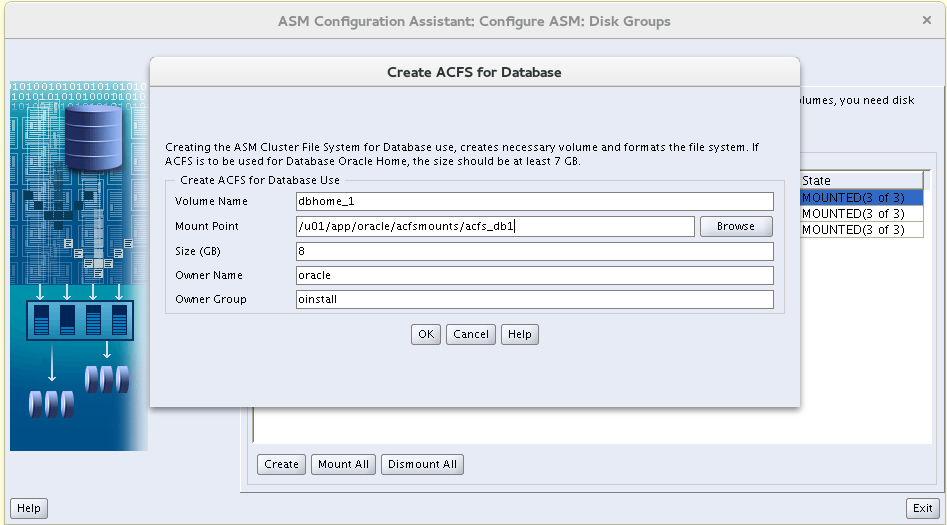

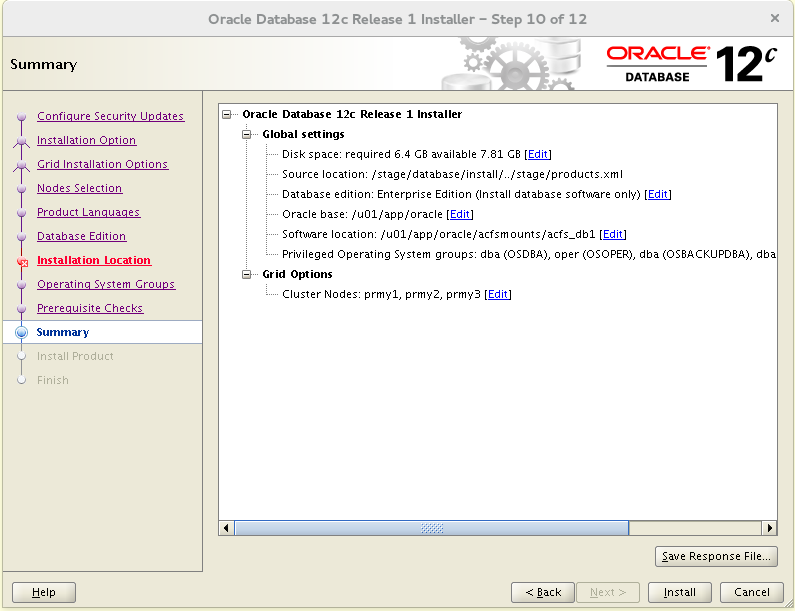

На ACFS создаем том и файловую систему acfs и туда будем инсталлировать программное обеспечение Oracle Database. Проблема непрерывности обслуживания при установке патчей и апгрейах нас не очень волнует — на этом стенде вряд ли будет больше одного пользователя. Зато немного быстрее пройдет инсталляция софта и чуть проще будет конфигурировать Data Duard.

Создаем точку монтирования acfs:

[root@prmy1 ~]# mkdir -p /u01/app/oracle/acfsmounts/acfs_db1 [root@prmy1 ~]# ssh prmy2 mkdir -p /u01/app/oracle/acfsmounts/acfs_db1 [root@prmy1 ~]# ssh prmy3 mkdir -p /u01/app/oracle/acfsmounts/acfs_db1 [root@prmy1 ~]# chown -R oracle:oinstall /u01/app/oracle/acfsmounts [root@prmy1 ~]# ssh prmy2 chown -R oracle:oinstall /u01/app/oracle/acfsmounts [root@prmy1 ~]# ssh prmy3 chown -R oracle:oinstall /u01/app/oracle/acfsmounts

[grid@prmy1-+ASM1 ~]$ asmca

Других версий программного обеспечения у нас не будет, поэтому меняем версию совместимости compatible.rdbms и compatible.asm группы DATA на 12.1.0.2.0.

FRA также создаем с compatible.rdbms и compatible.asm 12.1.0.2.0.

На ACFS дополнительно ставим compatible.advm= 12.1.0.2.0.

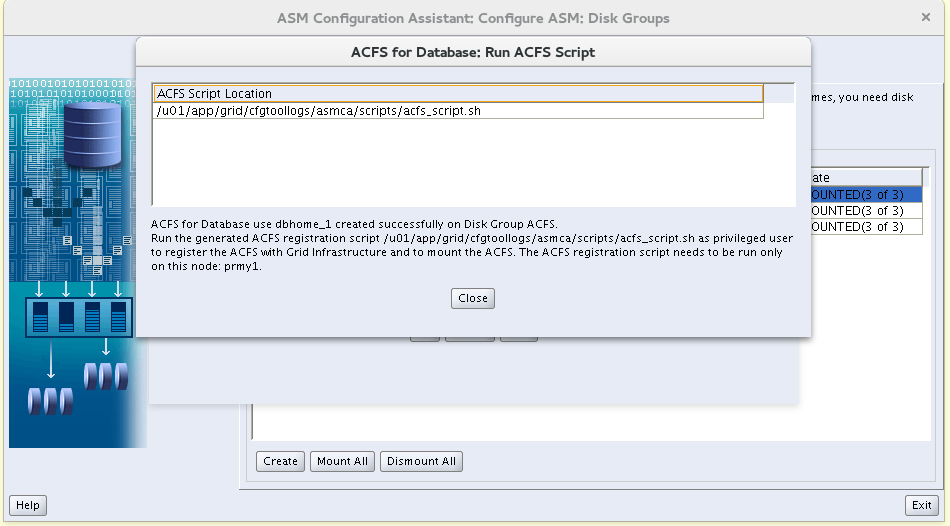

Нас попросят выполнить скрипт от пользователя root:

[root@prmy1 ~]# /u01/app/grid/cfgtoollogs/asmca/scripts/acfs_script.sh ACFS file system /u01/app/oracle/acfsmounts/acfs_db1 is mounted on nodes prmy1,prmy2,prmy3 Аналогично конфигурируем дисковые группы на кластере sby.

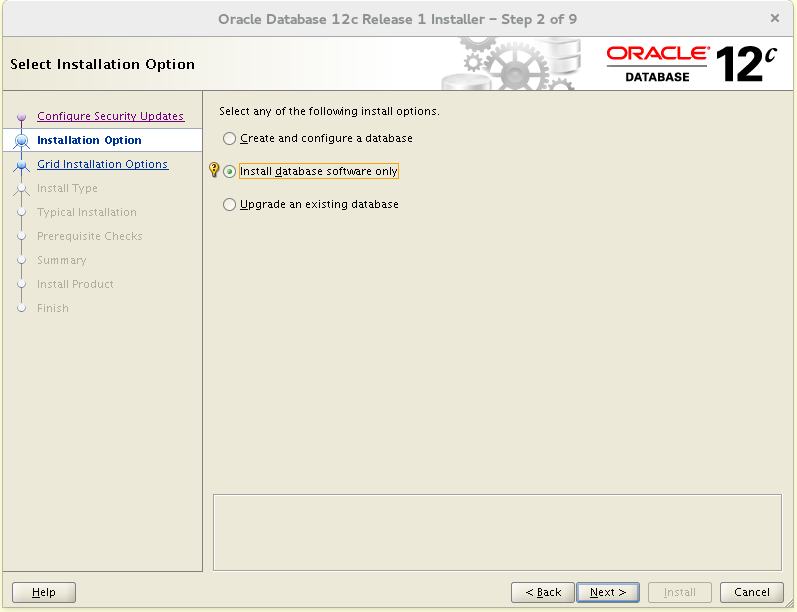

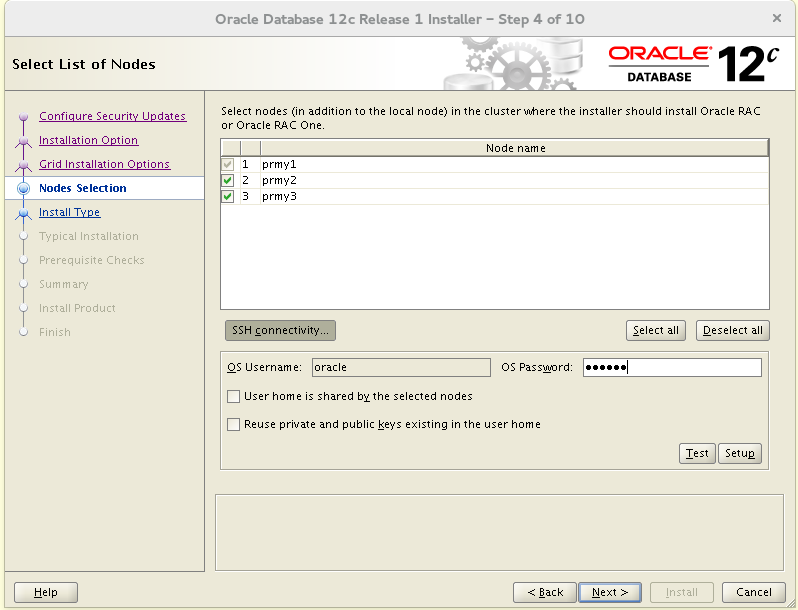

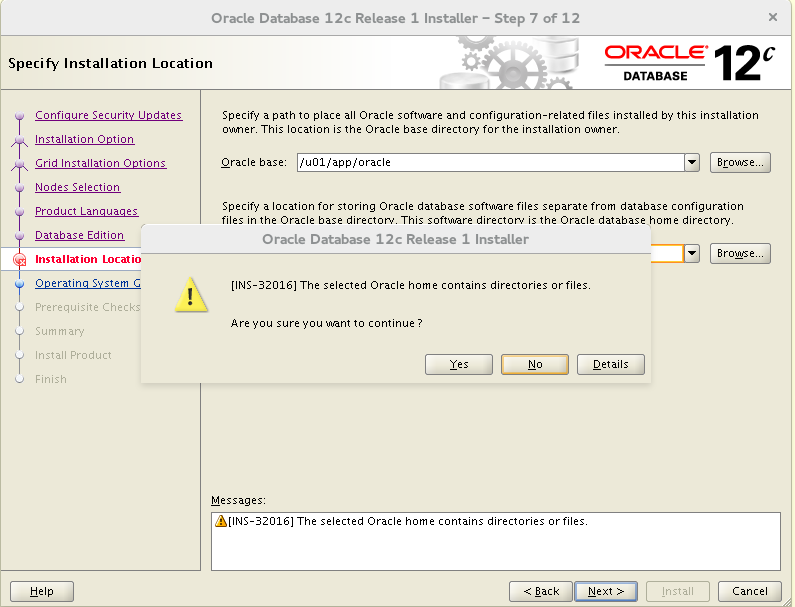

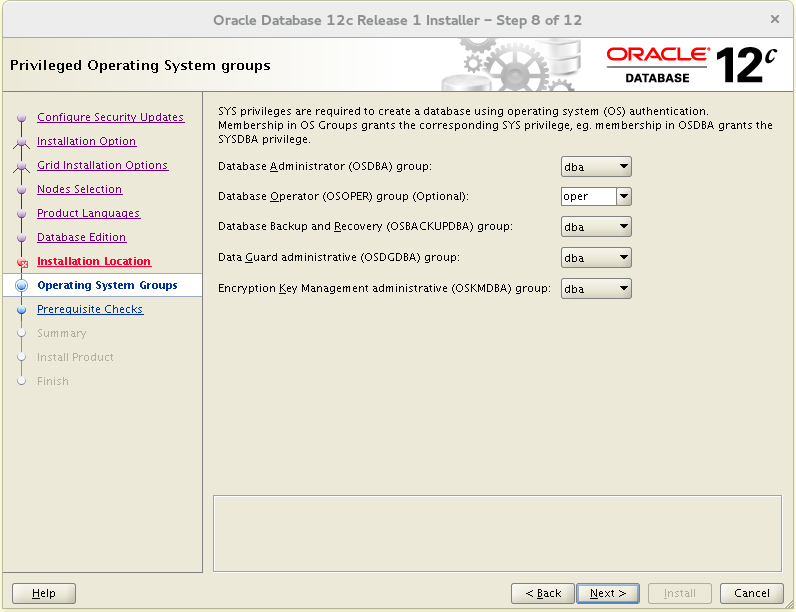

Инсталляция программного обеспечения Oracle Database

Инсталлируем программное обеспечение Oracle Database. Саму базу будем создавать отдельно при помощи dbca. Устанавливаем на все 3 узла кластера.

При проверке он почему-то не видит установленного в нужное ему значения параметра hard memlock=3828161. Создает fixup скрипт, а при его выполнении переустанавливает параметр в то же значение, но все равно на этот параметр обижается.

Короче, смело это игнорируем.

Аналогично устанавливаем софт на кластер sby.

После после завершения инсталляции на sby запускаем следующую команду:

[root@sby1-sby ~]# /u01/app/12.1.0/grid/bin/setasmgidwrap o=/u01/app/oracle/acfsmounts/acfs_db1/bin/oracle Иначе наша stanby БД не получит доступа к файлам на ASM.

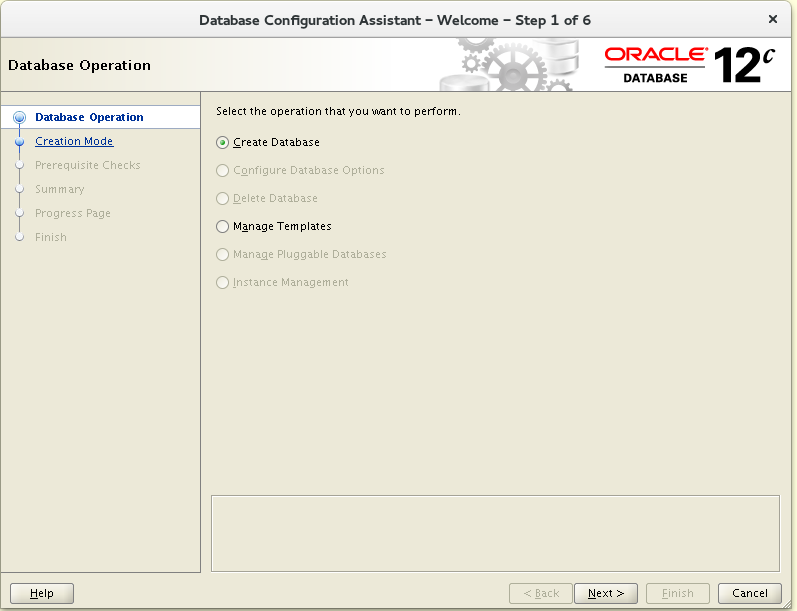

Создание основной кластерной базы данных

Базу данных создать можно и policy или administrator managed. В любом случае для этой БД задействуем только 2 узла кластера. Третий оставим для Far Sync.

Я создавал administrator managed базу данных:

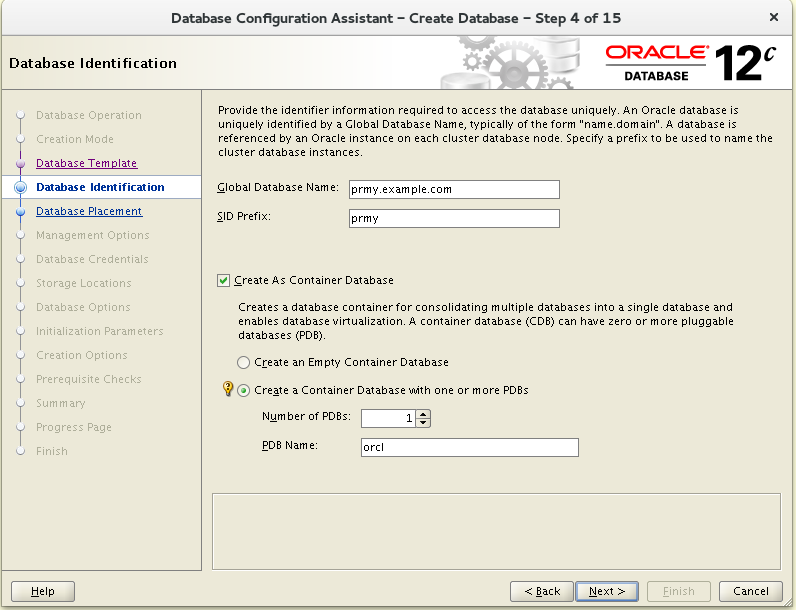

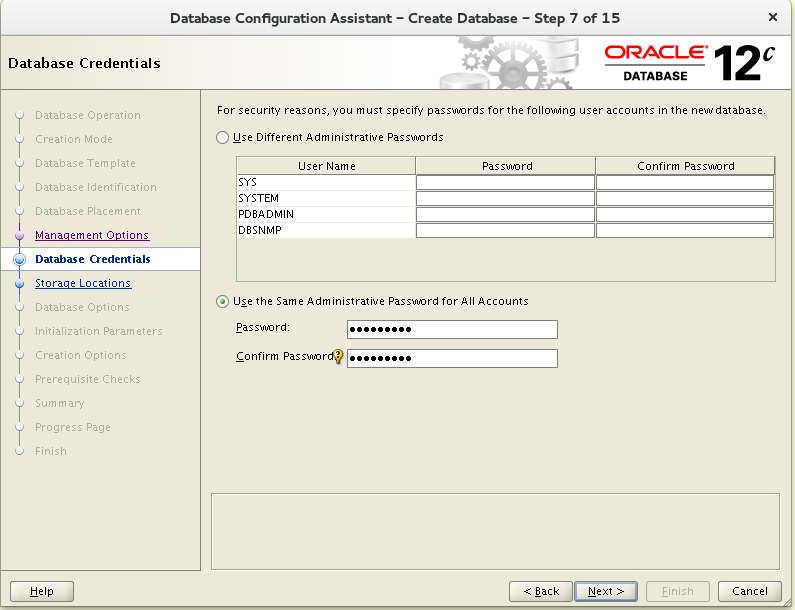

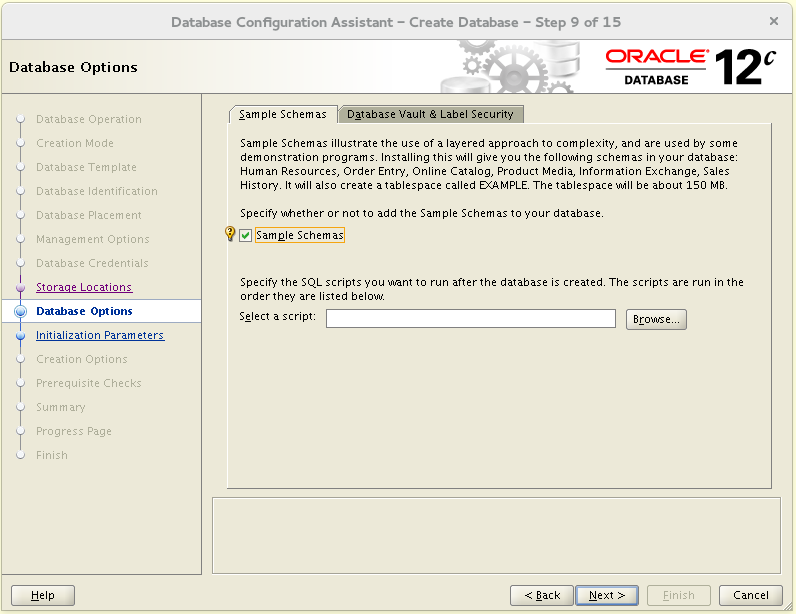

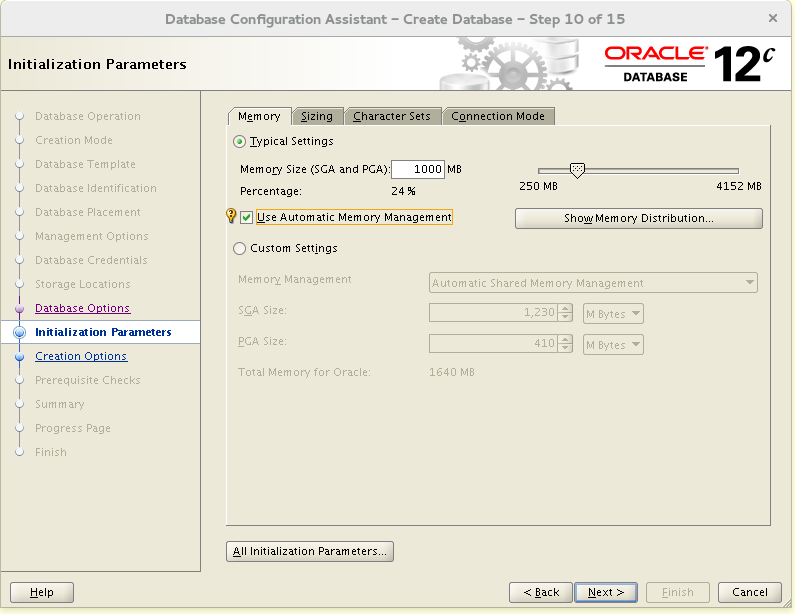

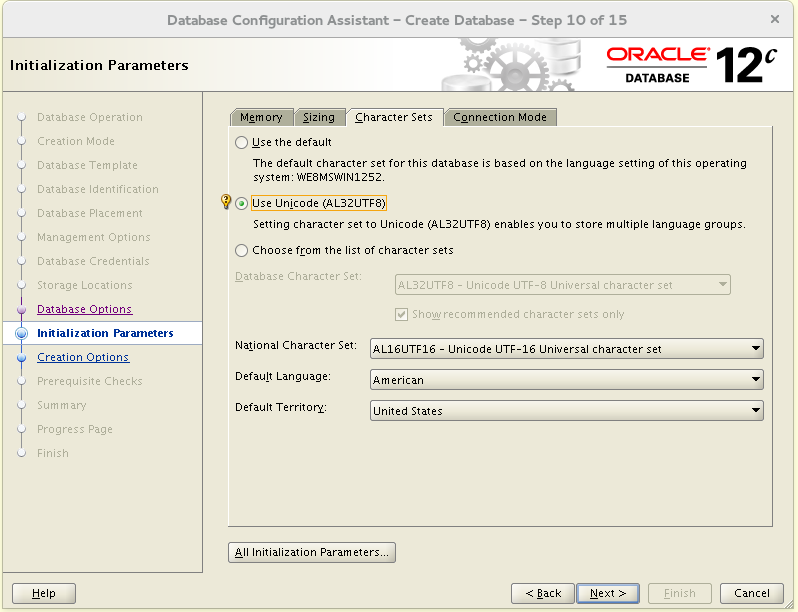

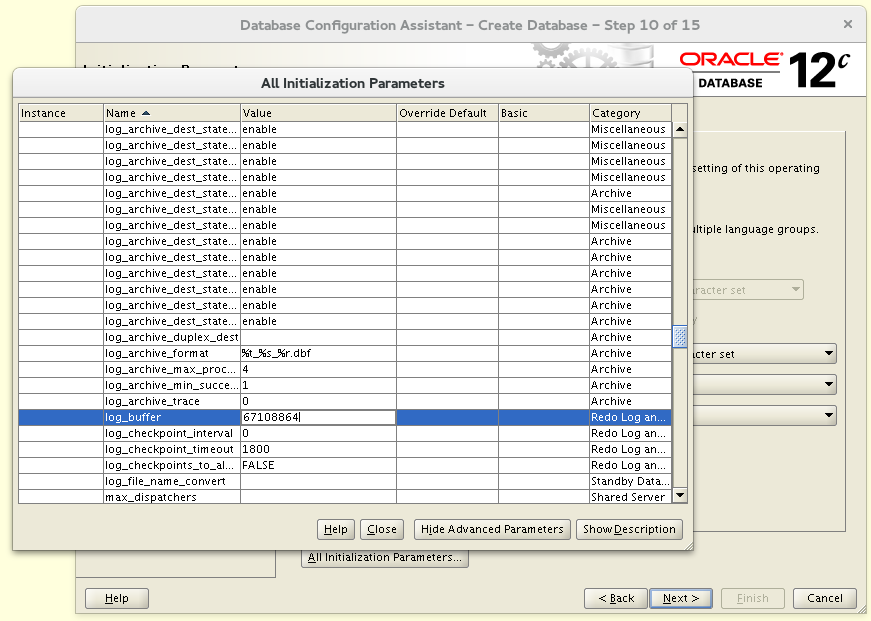

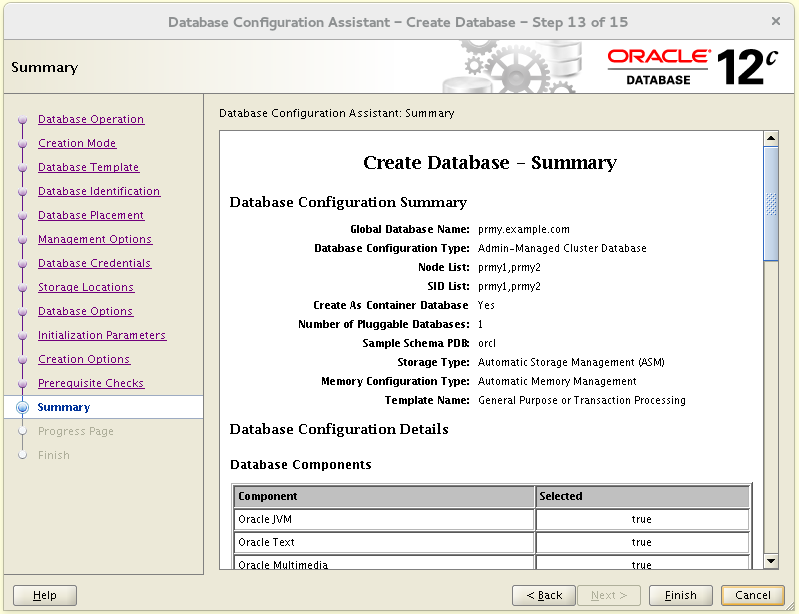

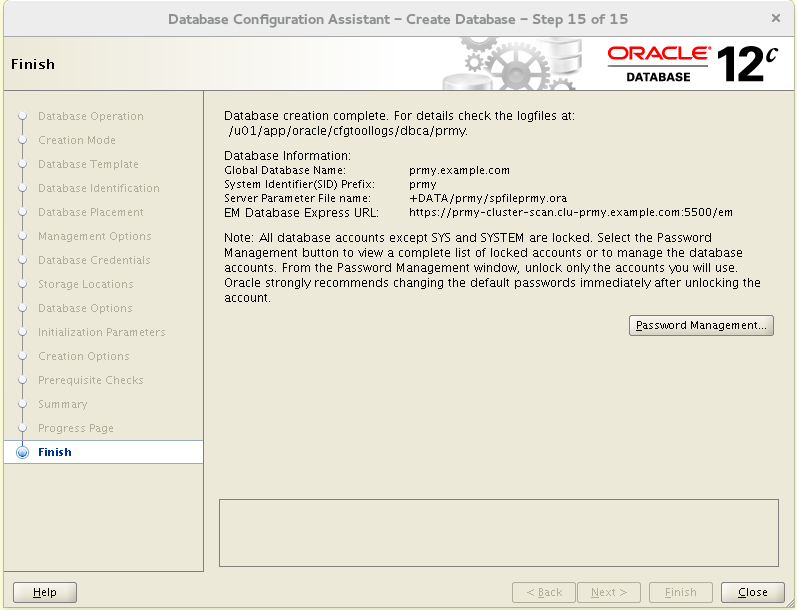

[oracle@prmy1- ~]$ . oraenv ORACLE_SID = [oracle] ? prmy ORACLE_HOME = [/home/oracle] ? /u01/app/oracle/acfsmounts/acfs_db1 The Oracle base has been set to /u01/app/oracle [oracle@prmy1-prmy ~]$ dbca

[oracle@prmy1-prmy ~]$ cd $ORACLE_HOME/dbs [oracle@prmy1-prmy dbs]$ mv initprmy1.ora initprmy.ora [oracle@prmy1-prmy dbs]$ rm initprmy2.ora [oracle@prmy1-prmy dbs]$ ln -s initprmy.ora initprmy1.ora [oracle@prmy1-prmy dbs]$ ln -s initprmy.ora initprmy2.ora Конфигурирование Oracle Net для Data Guard

Конфигурируем основной кластер. Сначала задействуем оставшуюся неиспользованной сеть 192.168.103.0/24 для Data Guard.

[root@prmy1 ~]# . oraenv ORACLE_SID = [root] ? +ASM1 The Oracle base has been set to /u01/app/grid [root@prmy1-+ASM1 ~]# oifcfg setif -global eth3/192.168.103.0:public [root@prmy1-+ASM1 ~]# crsctl stop cluster -all [root@prmy1-+ASM1 ~]# crsctl start cluster -all Проверяем те сети, что уже сконфигурированы:

[root@prmy1-+ASM1 ~]# crsctl stat res -t | grep network ora.net1.network Следующий свободный номер сети -2, конфигурируем ее:

[root@prmy1-+ASM1 ~]# srvctl add network -netnum 2 -subnet 192.168.103.0/255.255.255.0/eth3 -nettype dhcp -verbose Successfully added Network. [root@prmy1-+ASM1 ~]# crsctl stat res -t | grep network ora.net1.network ora.net2.network Добавляем VIP адреса:

[root@prmy1-+ASM1 ~]# srvctl add vip -node prmy1 -netnum 2 [root@prmy1-+ASM1 ~]# srvctl add vip -node prmy2 -netnum 2 [root@prmy1-+ASM1 ~]# srvctl add vip -node prmy3 -netnum 2 Стартуем их:

[root@prmy1-+ASM1 ~]# srvctl start vip -node prmy1 -netnum 2 [root@prmy1-+ASM1 ~]# srvctl start vip -node prmy2 -netnum 2 [root@prmy1-+ASM1 ~]# srvctl start vip -node prmy3 -netnum 2 Добавляем SCAN LISTENER’ы:

[root@prmy1-+ASM1 ~]# srvctl add scan_listener -netnum 2 -listener DG -endpoints TCP:12001 В отличие от тех что в public сети, эти слушают порт 12001.

Добавляем в эту сеть SCAN адрес. Назначение его такое же что и аналогичного SCAN в public сети:

[root@prmy1-+ASM1 ~]# srvctl add scan -scanname prmy-dg-scan -netnum 2 Стартуем их:

[root@prmy1-+ASM1 ~]# srvctl start scan_listener -netnum 2 [root@prmy1-+ASM1 ~]# srvctl start scan -netnum 2 Все что мы сейчас создали должно отобразиться в GNS:

[root@prmy1-+ASM1 ~]# srvctl config gns -list

Net-X-1.oraAsm SRV Target: 192.168.101.103 Protocol: tcp Port: 1522 Weight: 0 Priority: 0 Flags: 0x101

Net-X-2.oraAsm SRV Target: 192.168.101.102 Protocol: tcp Port: 1522 Weight: 0 Priority: 0 Flags: 0x101

Net-X-2.oraAsm SRV Target: 192.168.101.106.sby-cluster Protocol: tcp Port: 1522 Weight: 0 Priority: 0 Flags: 0x101

Net-X-3.oraAsm SRV Target: 192.168.101.101 Protocol: tcp Port: 1522 Weight: 0 Priority: 0 Flags: 0x101

Net-X-3.oraAsm SRV Target: 192.168.101.105.sby-cluster Protocol: tcp Port: 1522 Weight: 0 Priority: 0 Flags: 0x101

Net-X-4.oraAsm SRV Target: 192.168.102.100.sby-cluster Protocol: tcp Port: 1523 Weight: 0 Priority: 0 Flags: 0x101

Net-X-4.oraAsm SRV Target: 192.168.102.103 Protocol: tcp Port: 1523 Weight: 0 Priority: 0 Flags: 0x101

Net-X-5.oraAsm SRV Target: 192.168.102.101 Protocol: tcp Port: 1523 Weight: 0 Priority: 0 Flags: 0x101

Net-X-5.oraAsm SRV Target: 192.168.102.106.sby-cluster Protocol: tcp Port: 1523 Weight: 0 Priority: 0 Flags: 0x101

Net-X-6.oraAsm SRV Target: 192.168.102.102 Protocol: tcp Port: 1523 Weight: 0 Priority: 0 Flags: 0x101

Net-X-6.oraAsm SRV Target: 192.168.102.105.sby-cluster Protocol: tcp Port: 1523 Weight: 0 Priority: 0 Flags: 0x101

Oracle-GNS A 192.168.100.51 Unique Flags: 0x115

prmy-cluster-scan A 192.168.100.127 Unique Flags: 0x81

prmy-cluster-scan A 192.168.100.128 Unique Flags: 0x81

prmy-cluster-scan A 192.168.100.129 Unique Flags: 0x81

prmy-cluster-scan1-net2-vip A 192.168.103.103 Unique Flags: 0x1

prmy-cluster-scan1-vip A 192.168.100.128 Unique Flags: 0x81

prmy-cluster-scan2-net2-vip A 192.168.103.104 Unique Flags: 0x1

prmy-cluster-scan2-vip A 192.168.100.129 Unique Flags: 0x81

prmy-cluster-scan3-net2-vip A 192.168.103.105 Unique Flags: 0x1

prmy-cluster-scan3-vip A 192.168.100.127 Unique Flags: 0x81

prmy-cluster.Oracle-GNS SRV Target: Oracle-GNS Protocol: tcp Port: 42325 Weight: 0 Priority: 0 Flags: 0x115

prmy-cluster.Oracle-GNS TXT CLUSTER_NAME=«prmy-cluster», CLUSTER_GUID=«f1d4ab3f48f1df86bf83ea440ea13327», NODE_NAME=«prmy3», SERVER_STATE=«RUNNING», VERSION=«12.1.0.2.0», DOMAIN=«clu-prmy.example.com» Flags: 0x115

prmy-dg-scan A 192.168.103.103 Unique Flags: 0x1

prmy-dg-scan A 192.168.103.104 Unique Flags: 0x1

prmy-dg-scan A 192.168.103.105 Unique Flags: 0x1

prmy1-2-vip A 192.168.103.100 Unique Flags: 0x1

prmy1-vip A 192.168.100.139 Unique Flags: 0x81

prmy2-2-vip A 192.168.103.101 Unique Flags: 0x1

prmy2-vip A 192.168.100.140 Unique Flags: 0x81

prmy3-2-vip A 192.168.103.102 Unique Flags: 0x1

prmy3-vip A 192.168.100.141 Unique Flags: 0x81

sby-cluster-scan.sby-cluster A 192.168.100.145 Unique Flags: 0x1

sby-cluster-scan.sby-cluster A 192.168.100.149 Unique Flags: 0x1

sby-cluster-scan.sby-cluster A 192.168.100.150 Unique Flags: 0x1

sby-cluster-scan1-vip.sby-cluster A 192.168.100.150 Unique Flags: 0x1

sby-cluster-scan2-vip.sby-cluster A 192.168.100.149 Unique Flags: 0x1

sby-cluster-scan3-vip.sby-cluster A 192.168.100.145 Unique Flags: 0x1

sby1-vip.sby-cluster A 192.168.100.148 Unique Flags: 0x1

sby2-vip.sby-cluster A 192.168.100.151 Unique Flags: 0x1

sby3-vip.sby-cluster A 192.168.100.138 Unique Flags: 0x1

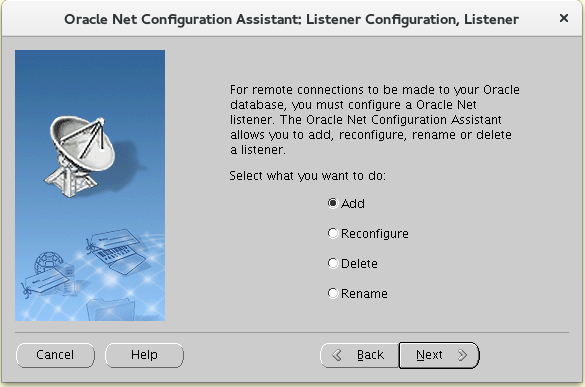

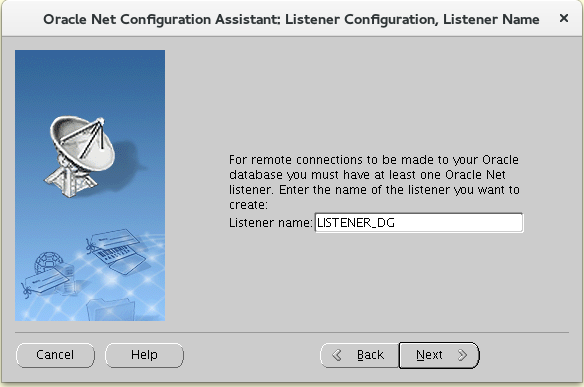

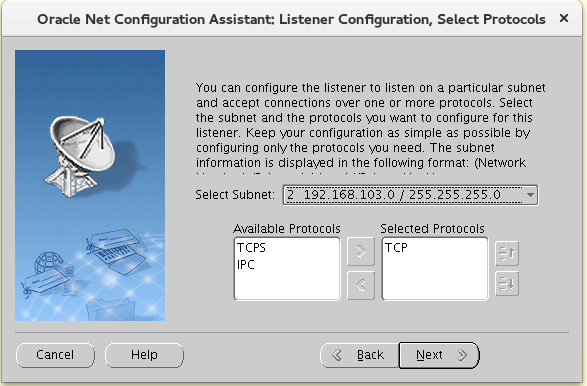

Теперь создадим листенер базы данных в этой сети с названием LISTENER_DG и портом 12001.

[grid@prmy1-+ASM1 ~]$ netca

Этот листенер предназначет исключительно для Data Guard требует дополнительного конфигурирования на каждом узле кластеров и в нем же будет прописан статический сервис для Data Guard.

Конфигурировать буду в 2 шага, сначала через netmgr для того чтобы не ошибиться в синтаксисе:

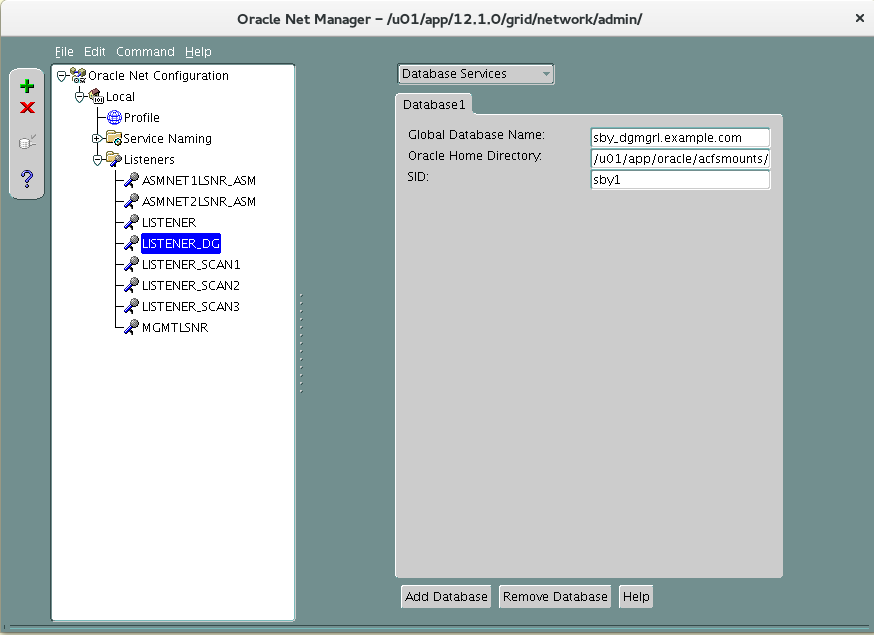

prmy1:

[grid@prmy1-+ASM1 ~]$ netmgr

А потом еще и руками, потому что netmgr не понимает больших значений параметров *_BUF_SIZE.

[grid@prmy1-+ASM1 ~]$ vi $ORACLE_HOME/network/admin/listener.ora LISTENER_DG = (DESCRIPTION = (ADDRESS = (PROTOCOL = IPC)(KEY = LISTENER_DG)(SEND_BUF_SIZE = 10485760)(RECV_BUF_SIZE = 10485760)) ) SID_LIST_LISTENER_DG = (SID_LIST = (SID_DESC = (GLOBAL_DBNAME = prmy_dgmgrl.example.com) (ORACLE_HOME = /u01/app/oracle/acfsmounts/acfs_db1) (SID_NAME = prmy1) ) ) Перезагрузим конфигурацию:

[grid@prmy1-+ASM1 ~]$ lsnrctl reload listener_dg Connecting to (DESCRIPTION=(ADDRESS=(PROTOCOL=IPC)(KEY=LISTENER_DG)(SEND_BUF_SIZE=10485760)(RECV_BUF_SIZE=10485760))) The command completed successfully prmy2:

[grid@prmy2-+ASM2 ~]$ netmgr [grid@prmy2-+ASM2 ~]$ vi $ORACLE_HOME/network/admin/listener.ora LISTENER_DG = (DESCRIPTION = (ADDRESS = (PROTOCOL = IPC)(KEY = LISTENER_DG)(SEND_BUF_SIZE = 10485760)(RECV_BUF_SIZE = 10485760)) ) SID_LIST_LISTENER_DG = (SID_LIST = (SID_DESC = (GLOBAL_DBNAME = prmy_dgmgrl.example.com) (ORACLE_HOME = /u01/app/oracle/acfsmounts/acfs_db1) (SID_NAME = prmy2) ) )

[grid@prmy2-+ASM2 ~]$ lsnrctl reload listener_dg Connecting to (DESCRIPTION=(ADDRESS=(PROTOCOL=IPC)(KEY=LISTENER_DG)(SEND_BUF_SIZE=10485760)(RECV_BUF_SIZE=10485760))) The command completed successfully prmy3:

[grid@prmy3-+ASM3 ~]$ netmgr [grid@prmy3-+ASM3 ~]$ vi $ORACLE_HOME/network/admin/listener.ora LISTENER_DG = (DESCRIPTION = (ADDRESS = (PROTOCOL = IPC)(KEY = LISTENER_DG)(SEND_BUF_SIZE = 10485760)(RECV_BUF_SIZE = 10485760)) ) SID_LIST_LISTENER_DG = (SID_LIST = (SID_DESC = (GLOBAL_DBNAME = prmy_dgmgrl.example.com) (ORACLE_HOME = /u01/app/oracle/acfsmounts/acfs_db1) (SID_NAME = prmy3) ) )

[grid@prmy3-+ASM3 ~]$ lsnrctl reload listener_dg Connecting to (DESCRIPTION=(ADDRESS=(PROTOCOL=IPC)(KEY=LISTENER_DG)(SEND_BUF_SIZE=10485760)(RECV_BUF_SIZE=10485760))) The command completed successfully Для кластера sby процедуру придется повторить:

[root@sby1 ~]# . oraenv ORACLE_SID = [root] ? +ASM1 The Oracle base has been set to /u01/app/grid [root@sby1-+ASM1 ~]# oifcfg setif -global eth3/192.168.103.0:public [root@sby1-+ASM1 ~]# crsctl stop cluster -all [root@sby1-+ASM1 ~]# crsctl start cluster -all [root@sby1-+ASM1 ~]# srvctl add network -netnum 2 -subnet 192.168.103.0/255.255.255.0/eth3 -nettype dhcp -verbose Successfully added Network. [root@sby1-+ASM1 ~]# srvctl add vip -node sby1 -netnum 2 [root@sby1-+ASM1 ~]# srvctl add vip -node sby2 -netnum 2 [root@sby1-+ASM1 ~]# srvctl add vip -node sby3 -netnum 2 [root@sby1-+ASM1 ~]# srvctl start vip -node sby1 -netnum 2 [root@sby1-+ASM1 ~]# srvctl start vip -node sby2 -netnum 2 [root@sby1-+ASM1 ~]# srvctl start vip -node sby3 -netnum 2 [root@sby1-+ASM1 ~]# srvctl add scan_listener -netnum 2 -listener DG -endpoints TCP:12001 [root@sby1-+ASM1 ~]# srvctl add scan -scanname sby-dg-scan -netnum 2 [root@sby1-+ASM1 ~]# srvctl start scan_listener -netnum 2 [root@sby1-+ASM1 ~]# srvctl start scan -netnum 2 Смотрим что получилось (выполняем команду на prmy1):

[root@prmy1-+ASM1 ~]# srvctl config gns -list

Net-X-1.oraAsm SRV Target: 192.168.101.103 Protocol: tcp Port: 1522 Weight: 0 Priority: 0 Flags: 0x101

Net-X-2.oraAsm SRV Target: 192.168.101.102 Protocol: tcp Port: 1522 Weight: 0 Priority: 0 Flags: 0x101

Net-X-2.oraAsm SRV Target: 192.168.101.106.sby-cluster Protocol: tcp Port: 1522 Weight: 0 Priority: 0 Flags: 0x101

Net-X-3.oraAsm SRV Target: 192.168.101.101 Protocol: tcp Port: 1522 Weight: 0 Priority: 0 Flags: 0x101

Net-X-3.oraAsm SRV Target: 192.168.101.105.sby-cluster Protocol: tcp Port: 1522 Weight: 0 Priority: 0 Flags: 0x101

Net-X-4.oraAsm SRV Target: 192.168.102.100.sby-cluster Protocol: tcp Port: 1523 Weight: 0 Priority: 0 Flags: 0x101

Net-X-4.oraAsm SRV Target: 192.168.102.103 Protocol: tcp Port: 1523 Weight: 0 Priority: 0 Flags: 0x101

Net-X-5.oraAsm SRV Target: 192.168.102.101 Protocol: tcp Port: 1523 Weight: 0 Priority: 0 Flags: 0x101

Net-X-5.oraAsm SRV Target: 192.168.102.106.sby-cluster Protocol: tcp Port: 1523 Weight: 0 Priority: 0 Flags: 0x101

Net-X-6.oraAsm SRV Target: 192.168.102.102 Protocol: tcp Port: 1523 Weight: 0 Priority: 0 Flags: 0x101

Net-X-6.oraAsm SRV Target: 192.168.102.105.sby-cluster Protocol: tcp Port: 1523 Weight: 0 Priority: 0 Flags: 0x101

Oracle-GNS A 192.168.100.51 Unique Flags: 0x115

prmy-cluster-scan A 192.168.100.127 Unique Flags: 0x81

prmy-cluster-scan A 192.168.100.128 Unique Flags: 0x81

prmy-cluster-scan A 192.168.100.129 Unique Flags: 0x81

prmy-cluster-scan1-net2-vip A 192.168.103.103 Unique Flags: 0x1

prmy-cluster-scan1-vip A 192.168.100.128 Unique Flags: 0x81

prmy-cluster-scan2-net2-vip A 192.168.103.104 Unique Flags: 0x1

prmy-cluster-scan2-vip A 192.168.100.129 Unique Flags: 0x81

prmy-cluster-scan3-net2-vip A 192.168.103.105 Unique Flags: 0x1

prmy-cluster-scan3-vip A 192.168.100.127 Unique Flags: 0x81

prmy-cluster.Oracle-GNS SRV Target: Oracle-GNS Protocol: tcp Port: 42325 Weight: 0 Priority: 0 Flags: 0x115

prmy-cluster.Oracle-GNS TXT CLUSTER_NAME=«prmy-cluster», CLUSTER_GUID=«f1d4ab3f48f1df86bf83ea440ea13327», NODE_NAME=«prmy3», SERVER_STATE=«RUNNING», VERSION=«12.1.0.2.0», DOMAIN=«clu-prmy.example.com» Flags: 0x115

prmy-dg-scan A 192.168.103.103 Unique Flags: 0x1

prmy-dg-scan A 192.168.103.104 Unique Flags: 0x1

prmy-dg-scan A 192.168.103.105 Unique Flags: 0x1

prmy1-2-vip A 192.168.103.100 Unique Flags: 0x1

prmy1-vip A 192.168.100.139 Unique Flags: 0x81

prmy2-2-vip A 192.168.103.101 Unique Flags: 0x1

prmy2-vip A 192.168.100.140 Unique Flags: 0x81

prmy3-2-vip A 192.168.103.102 Unique Flags: 0x1

prmy3-vip A 192.168.100.141 Unique Flags: 0x81

sby-cluster-scan.sby-cluster A 192.168.100.145 Unique Flags: 0x1

sby-cluster-scan.sby-cluster A 192.168.100.149 Unique Flags: 0x1

sby-cluster-scan.sby-cluster A 192.168.100.150 Unique Flags: 0x1

sby-cluster-scan1-net2-vip.sby-cluster A 192.168.103.106 Unique Flags: 0x1

sby-cluster-scan1-vip.sby-cluster A 192.168.100.150 Unique Flags: 0x1

sby-cluster-scan2-net2-vip.sby-cluster A 192.168.103.107 Unique Flags: 0x1

sby-cluster-scan2-vip.sby-cluster A 192.168.100.149 Unique Flags: 0x1

sby-cluster-scan3-net2-vip.sby-cluster A 192.168.103.108 Unique Flags: 0x1

sby-cluster-scan3-vip.sby-cluster A 192.168.100.145 Unique Flags: 0x1

sby-dg-scan.sby-cluster A 192.168.103.106 Unique Flags: 0x1

sby-dg-scan.sby-cluster A 192.168.103.107 Unique Flags: 0x1

sby-dg-scan.sby-cluster A 192.168.103.108 Unique Flags: 0x1

sby1-2-vip.sby-cluster A 192.168.103.109 Unique Flags: 0x1

sby1-vip.sby-cluster A 192.168.100.148 Unique Flags: 0x1

sby2-2-vip.sby-cluster A 192.168.103.110 Unique Flags: 0x1

sby2-vip.sby-cluster A 192.168.100.151 Unique Flags: 0x1

sby3-2-vip.sby-cluster A 192.168.103.111 Unique Flags: 0x1

sby3-vip.sby-cluster A 192.168.100.138 Unique Flags: 0x1

Синим цветом помечены записи GNS соответствующие адресам второй сети кластера sby.

Создаем листенер базы данных.

[grid@sby1-+ASM1 ~]$ netca sby1:

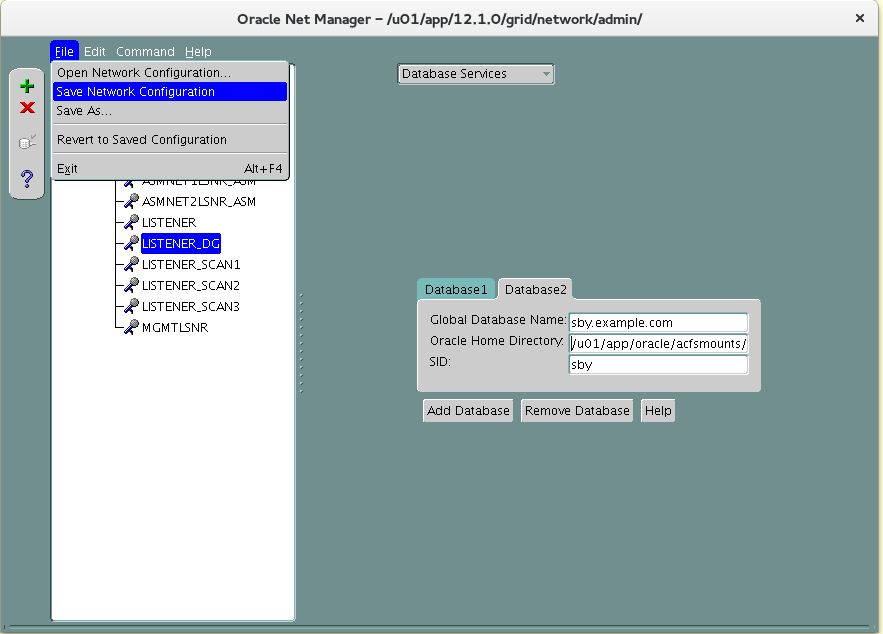

[grid@sby1-+ASM1 ~]$ netmgr

В отличии от кластера prmy здесь нам нужно прописать еще один статический сервис — sby.example.com. Он нам сейчас понадобитсядля клонирования базы данных. После конфигурирования Data Guard Broker этот сервис отсюда нужно удалить.

[grid@sby1-+ASM1 ~]$ vi $ORACLE_HOME/network/admin/listener.ora LISTENER_DG = (DESCRIPTION = (ADDRESS = (PROTOCOL = IPC)(KEY = LISTENER_DG)(SEND_BUF_SIZE = 10485760)(RECV_BUF_SIZE = 10485760)) ) SID_LIST_LISTENER_DG = (SID_LIST = (SID_DESC = (GLOBAL_DBNAME = sby_dgmgrl.example.com) (ORACLE_HOME = /u01/app/oracle/acfsmounts/acfs_db1) (SID_NAME = sby1) ) (SID_DESC = (GLOBAL_DBNAME = sby.example.com) (ORACLE_HOME = /u01/app/oracle/acfsmounts/acfs_db1) (SID_NAME = sby) ) ) [grid@sby1-+ASM1 ~]$ lsnrctl reload listener_dg Connecting to (DESCRIPTION=(ADDRESS=(PROTOCOL=IPC)(KEY=LISTENER_DG)(SEND_BUF_SIZE=10485760)(RECV_BUF_SIZE=10485760))) The command completed successfully sby2:

[grid@sby2-+ASM2 ~]$ netmgr [grid@sby2-+ASM2 ~]$ vi $ORACLE_HOME/network/admin/listener.ora LISTENER_DG = (DESCRIPTION = (ADDRESS = (PROTOCOL = IPC)(KEY = LISTENER_DG)(SEND_BUF_SIZE = 10485760)(RECV_BUF_SIZE = 10485760)) ) SID_LIST_LISTENER_DG = (SID_LIST = (SID_DESC = (GLOBAL_DBNAME = sby_dgmgrl.example.com) (ORACLE_HOME = /u01/app/oracle/acfsmounts/acfs_db1) (SID_NAME = sby2) ) ) [grid@sby2-+ASM2 ~]$ lsnrctl reload listener_dg Connecting to (DESCRIPTION=(ADDRESS=(PROTOCOL=IPC)(KEY=LISTENER_DG)(SEND_BUF_SIZE=10485760)(RECV_BUF_SIZE=10485760))) The command completed successfully sby3:

[grid@sby3-+ASM3 ~]$ netmgr [grid@sby3-+ASM3 ~]$ vi $ORACLE_HOME/network/admin/listener.ora LISTENER_DG = (DESCRIPTION = (ADDRESS = (PROTOCOL = IPC)(KEY = LISTENER_DG)(SEND_BUF_SIZE = 10485760)(RECV_BUF_SIZE = 10485760)) ) SID_LIST_LISTENER_DG = (SID_LIST = (SID_DESC = (GLOBAL_DBNAME = sbyfs_dgmgrl.example.com) (ORACLE_HOME = /u01/app/oracle/acfsmounts/acfs_db1) (SID_NAME = sbyfs1) ) ) [grid@sby3-+ASM3 ~]$ lsnrctl reload listener_dg Connecting to (DESCRIPTION=(ADDRESS=(PROTOCOL=IPC)(KEY=LISTENER_DG)(SEND_BUF_SIZE=10485760)(RECV_BUF_SIZE=10485760))) The command completed successfully Теперь нужно сконфигурировать tnsnames.ora.

[oracle@prmy1- ~]$ . oraenv ORACLE_SID = [oracle] ? prmy The Oracle base has been set to /u01/app/oracle [oracle@prmy1-prmy ~]$ vi $ORACLE_HOME/network/admin/tnsnames.ora

PRODSERV1 = (DESCRIPTION = (FAILOVER=on) (ADDRESS_LIST = (ADDRESS = (PROTOCOL = TCP)(HOST = prmy-cluster-scan.clu-prmy.example.com)(PORT = 1521)) (ADDRESS = (PROTOCOL = TCP)(HOST = sby-cluster-scan.sby-cluster.clu-prmy.example.com)(PORT = 1521)) ) (CONNECT_DATA = (SERVER = DEDICATED) (SERVICE_NAME = prodserv.example.com) ) ) PRMY = (DESCRIPTION = (ADDRESS_LIST = (ADDRESS = (PROTOCOL = TCP)(HOST = prmy-cluster-scan.clu-prmy.example.com)(PORT = 1521)) ) (CONNECT_DATA = (SERVER = DEDICATED) (SERVICE_NAME = prmy.example.com) ) ) SBY = (DESCRIPTION = (ADDRESS_LIST = (ADDRESS = (PROTOCOL = TCP)(HOST = sby-cluster-scan.sby-cluster.clu-prmy.example.com)(PORT = 1521)) ) (CONNECT_DATA = (SERVER = DEDICATED) (SERVICE_NAME = sby.example.com) ) ) PRMYFS = (DESCRIPTION = (ADDRESS_LIST = (ADDRESS = (PROTOCOL = TCP)(HOST = prmy-cluster-scan.clu-prmy.example.com)(PORT = 1521)) ) (CONNECT_DATA = (SERVER = DEDICATED) (SERVICE_NAME = prmyfs.example.com) ) ) SBYFS = (DESCRIPTION = (ADDRESS_LIST = (ADDRESS = (PROTOCOL = TCP)(HOST = sby-cluster-scan.sby-cluster.clu-prmy.example.com)(PORT = 1521)) ) (CONNECT_DATA = (SERVER = DEDICATED) (SERVICE_NAME = sbyfs.example.com) ) ) PRMY_DG = (DESCRIPTION = (ADDRESS_LIST = (ADDRESS = (PROTOCOL = TCP)(HOST = prmy-dg-scan.clu-prmy.example.com)(PORT = 12001)) ) (SDU = 65535) (CONNECT_DATA = (SERVER = DEDICATED) (SERVICE_NAME = prmy.example.com) ) ) PRMYFS_DG = (DESCRIPTION = (ADDRESS_LIST = (ADDRESS = (PROTOCOL = TCP)(HOST = prmy-dg-scan.clu-prmy.example.com)(PORT = 12001)) ) (SDU = 65535) (CONNECT_DATA = (SERVER = DEDICATED) (SERVICE_NAME = prmyfs.example.com) ) ) SBY_DG = (DESCRIPTION = (ADDRESS_LIST = (ADDRESS = (PROTOCOL = TCP)(HOST = sby-dg-scan.sby-cluster.clu-prmy.example.com)(PORT = 12001)) ) (SDU = 65535) (CONNECT_DATA = (SERVER = DEDICATED) (SERVICE_NAME = sby.example.com) ) ) SBYFS_DG = (DESCRIPTION = (ADDRESS_LIST = (ADDRESS = (PROTOCOL = TCP)(HOST = sby-dg-scan.sby-cluster.clu-prmy.example.com)(PORT = 12001)) ) (SDU = 65535) (CONNECT_DATA = (SERVER = DEDICATED) (SERVICE_NAME = sbyfs.example.com) ) ) PRMY1_LOCAL_NET1 = (DESCRIPTION = (ADDRESS = (PROTOCOL = TCP)(HOST = prmy1-vip.clu-prmy.example.com)(PORT = 1521)) ) PRMY1_LOCAL_NET2 = (DESCRIPTION = (ADDRESS = (PROTOCOL = TCP)(HOST = prmy1-2-vip.clu-prmy.example.com)(PORT = 12001)) ) PRMY2_LOCAL_NET1 = (DESCRIPTION = (ADDRESS = (PROTOCOL = TCP)(HOST = prmy2-vip.clu-prmy.example.com)(PORT = 1521)) ) PRMY2_LOCAL_NET2 = (DESCRIPTION = (ADDRESS = (PROTOCOL = TCP)(HOST = prmy2-2-vip.clu-prmy.example.com)(PORT = 12001)) ) PRMY3_LOCAL_NET1 = (DESCRIPTION = (ADDRESS = (PROTOCOL = TCP)(HOST = prmy3-vip.clu-prmy.example.com)(PORT = 1521)) ) PRMY3_LOCAL_NET2 = (DESCRIPTION = (ADDRESS = (PROTOCOL = TCP)(HOST = prmy3-2-vip.clu-prmy.example.com)(PORT = 12001)) ) SBY1_LOCAL_NET1 = (DESCRIPTION = (ADDRESS = (PROTOCOL = TCP)(HOST = sby1-vip.sby-cluster.clu-prmy.example.com)(PORT = 1521)) ) SBY1_LOCAL_NET2 = (DESCRIPTION = (ADDRESS = (PROTOCOL = TCP)(HOST = sby1-2-vip.sby-cluster.clu-prmy.example.com)(PORT = 12001)) (SDU = 65535) (CONNECT_DATA = (SERVER = DEDICATED) (SERVICE_NAME = sby.example.com) ) ) SBY2_LOCAL_NET1 = (DESCRIPTION = (ADDRESS = (PROTOCOL = TCP)(HOST = sby2-vip.sby-cluster.clu-prmy.example.com)(PORT = 1521)) ) SBY2_LOCAL_NET2 = (DESCRIPTION = (ADDRESS = (PROTOCOL = TCP)(HOST = sby2-2-vip.sby-cluster.clu-prmy.example.com)(PORT = 12001)) ) SBY3_LOCAL_NET1 = (DESCRIPTION = (ADDRESS = (PROTOCOL = TCP)(HOST = sby3-vip.sby-cluster.clu-prmy.example.com)(PORT = 1521)) ) SBY3_LOCAL_NET2 = (DESCRIPTION = (ADDRESS = (PROTOCOL = TCP)(HOST = sby3-2-vip.sby-cluster.clu-prmy.example.com)(PORT = 12001)) ) REMOTE_NET1 = (DESCRIPTION = (ADDRESS = (PROTOCOL = TCP)(HOST = prmy-cluster-scan.clu-prmy.example.com)(PORT = 1521)) (ADDRESS = (PROTOCOL = TCP)(HOST = sby-cluster-scan.sby-cluster.clu-prmy.example.com)(PORT = 1521)) ) REMOTE_NET2 = (DESCRIPTION = (ADDRESS = (PROTOCOL = TCP)(HOST = prmy-dg-scan.clu-prmy.example.com)(PORT = 12001)) (ADDRESS = (PROTOCOL = TCP)(HOST = sby-dg-scan.sby-cluster.clu-prmy.example.com)(PORT = 12001)) )

[oracle@prmy1-prmy ~]$ scp $ORACLE_HOME/network/admin/tnsnames.ora sby1:$ORACLE_HOME/network/admin

Подготовка к клонированию основной базы данных

[oracle@prmy1-prmy ~]$ srvctl status database -d prmy Instance prmy1 is running on node prmy1 Instance prmy2 is running on node prmy2 [oracle@prmy1-prmy ~]$ export ORACLE_SID=prmy1 [oracle@prmy1-prmy1 ~]$ srvctl stop database -d prmy Включаем архивирование и заодно Flashback Database.

[oracle@prmy1-prmy1 ~]$ sqlplus / as sysdba Connected to an idle instance. SQL> startup mount ORACLE instance started. Total System Global Area 1048576000 bytes Fixed Size 2932336 bytes Variable Size 717226384 bytes Database Buffers 322961408 bytes Redo Buffers 5455872 bytes Database mounted. SQL> alter database archivelog; Database altered. SQL> alter database flashback on; Database altered. SQL> shutdown immediate ORA-01109: database not open Database dismounted. ORACLE instance shut down. SQL> exit [oracle@prmy1-prmy1 ~]$ srvctl start database -d prmy [oracle@prmy1-prmy1 ~]$ sqlplus / as sysdba SQL> alter database force logging; SQL> select GROUP#,BYTES,MEMBERS,THREAD# from v$log; GROUP# BYTES MEMBERS THREAD# ---------- ---------- ---------- ---------- 1 52428800 2 1 2 52428800 2 1 3 52428800 2 2 4 52428800 2 2 SQL> alter database add standby logfile thread 1 ('+DATA', '+FRA' ) size 52428800; SQL> alter database add standby logfile thread 1 ('+DATA', '+FRA' ) size 52428800; SQL> alter database add standby logfile thread 2 ('+DATA', '+FRA' ) size 52428800; SQL> alter database add standby logfile thread 2 ('+DATA', '+FRA' ) size 52428800; SQL> alter system set log_archive_dest_1='location=USE_DB_RECOVERY_FILE_DEST valid_for=(ALL_LOGFILES,ALL_ROLES) db_unique_name=prmy' scope=both sid ='*'; SQL> alter system set log_archive_dest_state_1='enable' scope=both sid ='*'; SQL> alter system set fal_server='sby' scope=both sid='*'; SQL> alter system set log_archive_max_processes=4 scope=both sid ='*'; SQL> alter system set log_archive_config='dg_config=(prmy,prmyfs,sby,sbyfs)' scope=both sid ='*'; SQL> alter system set standby_file_management='auto' scope=both sid ='*'; SQL> alter pluggable database all open; SQL> alter pluggable database all save state;

Подготовка резервной базы данных

[oracle@sby1- ~]$ mkdir -p /u01/app/oracle/admin/sby/adump [oracle@sby1- ~]$ ssh sby2 mkdir -p /u01/app/oracle/admin/sby/adump [oracle@sby1- ~]$ ssh sby3 mkdir -p /u01/app/oracle/admin/sbyfs/adump [oracle@sby1- ~]$ . oraenv ORACLE_SID = [oracle] ? sby ORACLE_HOME = [/home/oracle] ? /u01/app/oracle/acfsmounts/acfs_db1 The Oracle base has been set to /u01/app/oracle Создаем временный файл параметров.

[oracle@sby1-sby ~]$ echo 'DB_NAME=sby' > $ORACLE_HOME/dbs/initsby.ora [oracle@sby1-sby ~]$ echo 'DB_DOMAIN=example.com' >> $ORACLE_HOME/dbs/initsby.ora Создаем временный файл паролей, его заменит на реальный команда DUPLICATE.

[oracle@sby1-sby ~]$ orapwd file=$ORACLE_HOME/dbs/orapwsby password=oracle_4U Стартуем экземпляр:

[oracle@sby1-sby ~]$ sqlplus / as sysdba SQL> startup nomount pfile='$ORACLE_HOME/dbs/initsby.ora' ORACLE instance started. Total System Global Area 243269632 bytes Fixed Size 2923000 bytes Variable Size 184550920 bytes Database Buffers 50331648 bytes Redo Buffers 5464064 bytes SQL> exit

Клонирование базы данных

Готовим скрипт для RMAN. Не забываем что база данных у нас кластерная.

[oracle@prmy1-prmy ~]$ vi cr_phys_sby.txt run { allocate channel prmy1 type disk; allocate auxiliary channel sby1 type disk; duplicate target database for standby from active database spfile parameter_value_convert 'prmy','sby' set db_unique_name='sby' set control_files='+DATA','+FRA' set log_archive_config='dg_config=(prmy,prmyfs,sby,sbyfs)' set fal_server='prmy' set log_archive_dest_1='location=USE_DB_RECOVERY_FILE_DEST valid_for=(ALL_LOGFILES,ALL_ROLES) db_unique_name=sby' set instance_number='1' set thread='1' set undo_tablespace='UNDOTBS1'; } Стартуем скрипт:

[oracle@prmy1-prmy ~]$ rman target sys/oracle_4U@prmy auxiliary sys/oracle_4U@sby1_local_net2 RMAN> @cr_phys_sby.txt